AI poisoning is a serious threat to machine learning models, and it's essential to understand the risks involved. AI poisoning occurs when malicious data is fed into a model, causing it to produce inaccurate or biased results.

This can happen in various ways, including data poisoning, model poisoning, and backdoor attacks. Data poisoning involves inserting malicious data into a training dataset, while model poisoning involves modifying a model's architecture or weights to produce incorrect results. Backdoor attacks plant a hidden trigger so that the model misbehaves only on inputs that contain it.

The consequences of AI poisoning can be severe, including financial losses, reputational damage, and even physical harm. For example, one study reported that poisoning reduced a model's accuracy by 20%, although the size of the effect depends on the model, the data, and the attack.

To mitigate AI poisoning, it's crucial to implement robust security measures, such as data validation and model monitoring. This can help detect and prevent malicious data from being fed into a model.

What Is Data Poisoning

Data poisoning is a type of attack that involves the deliberate contamination of data to compromise the performance of AI and ML systems.

This type of attack strikes at the training phase, where adversaries introduce, modify, or delete selected data points in a training dataset to induce biases, errors, or specific vulnerabilities.

AI models are inherently vulnerable to data poisoning because they're trained on massive datasets, making it difficult for developers to thoroughly review all the data for malicious content.

Data poisoning can cause AI models to generate harmful, misleading, or incorrect outputs, which can have serious consequences.

The scale of data required to train AI models makes it easy for external actors to access and manipulate the data, which is often sourced from public web pages or user inputs.

Attackers can exploit this vulnerability to compromise AI models, as seen in the case of Microsoft's Tay chatbot, which learned to generate harmful content from racist comments and obscenities in user posts.

Types of Attacks

Data poisoning attacks take several forms, and malicious actors use a variety of methods to execute them. One of the simplest is mislabeling the AI model's training data set.

Mislabeling attacks involve deliberately mislabeling portions of the training data set, leading the model to learn incorrect patterns and thus give inaccurate results after deployment. For example, feeding a model numerous images of horses incorrectly labeled as cars during the training phase might teach the AI system to mistakenly recognize horses as cars after deployment.
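The horse-and-car example can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a real attack tool: `flip_labels`, its parameters, and the toy label list are hypothetical names introduced here.

```python
import random

def flip_labels(labels, source, target, fraction, seed=0):
    """Return a copy of `labels` with a fraction of `source` labels changed to `target`."""
    rng = random.Random(seed)
    source_idx = [i for i, y in enumerate(labels) if y == source]
    n_flip = int(len(source_idx) * fraction)
    flipped = set(rng.sample(source_idx, n_flip))
    return [target if i in flipped else y for i, y in enumerate(labels)]

# Toy training labels (assumed for illustration): 10 horse images, 10 car images.
clean = ["horse"] * 10 + ["car"] * 10

# The attacker relabels half of the horses as cars.
poisoned = flip_labels(clean, source="horse", target="car", fraction=0.5)

print(poisoned.count("horse"), poisoned.count("car"))  # 5 15
```

A model trained on `poisoned` sees five horse images carrying the label "car", which is the kind of incorrect pattern the paragraph above describes.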

The most common approaches to data poisoning attacks are label poisoning, training data poisoning, model inversion attacks, and stealth attacks:

- Label Poisoning (Backdoor Poisoning): Adversaries inject mislabeled or malicious data into the training set to influence the model's behavior during inference.

- Training Data Poisoning: In training data poisoning, the attacker modifies a significant portion of the training data to influence the AI model's learning process.

- Model Inversion Attacks: In model inversion attacks, adversaries exploit the AI model's responses to infer sensitive information about the data it was trained on.

- Stealth Attacks: In stealth attacks, the adversary strategically manipulates the training data to create vulnerabilities that are difficult to detect during the model's development and testing phases.

These attacks can have serious consequences, particularly when AI systems are used in critical applications such as autonomous vehicles, medical diagnosis, or financial systems.

Mechanism of Data Poisoning

Data poisoning attacks can be broadly categorized into two types: targeted attacks and nontargeted attacks. Targeted attacks aim to influence the model's behavior for specific inputs without degrading its overall performance, such as training a facial recognition system to misclassify a particular individual's face. Nontargeted attacks aim to degrade the model's overall performance, for example by adding noise or irrelevant data points.

The success of data poisoning hinges on three critical components: stealth, efficacy, and consistency. Stealth is crucial because the poisoned data should not be easily detectable to escape any data-cleaning or pre-processing mechanisms.

Direct data poisoning attacks, also known as targeted attacks, occur when threat actors manipulate the ML model to behave in a specific way for a particular targeted input, while leaving the model's overall performance unaffected. This can happen when threat actors inject carefully crafted samples into the training data of a malware detection tool to cause the ML system to misclassify malicious files as benign.

Here are the three components that determine the success of data poisoning attacks:

- Stealth: The poisoned data should not be easily detectable to escape any data-cleaning or pre-processing mechanisms.

- Efficacy: The attack should lead to the desired degradation in model performance or the intended misbehavior.

- Consistency: The effects of the attack should consistently manifest in various contexts or environments where the model operates.
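These three components can be turned into rough numbers when evaluating a suspected attack. The sketch below is one possible way to do that, assuming clean-data accuracy as a proxy for stealth, the attacker's success rate as efficacy, and the worst-case success rate across environments as consistency. The function names, the environment names, and all predictions are made up for illustration.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the true labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

def attack_success_rate(predictions, attacker_label):
    """Fraction of attacker-chosen inputs that received the attacker's label."""
    return sum(p == attacker_label for p in predictions) / len(predictions)

# Hypothetical outputs of a poisoned malware detector on clean files.
clean_labels = ["benign", "malicious", "benign", "malicious"]
clean_predictions = ["benign", "malicious", "benign", "malicious"]

# Hypothetical predictions for malicious files crafted by the attacker,
# grouped by the (made-up) environment in which the model runs.
triggered_predictions = {
    "email_gateway": ["benign", "benign", "benign", "malicious"],
    "endpoint_agent": ["benign", "benign", "malicious", "malicious"],
}

# Stealth proxy: the model still looks healthy on clean data.
stealth_proxy = accuracy(clean_predictions, clean_labels)

# Efficacy: how often the attacker's files are waved through, on average.
rates = {env: attack_success_rate(preds, "benign")
         for env, preds in triggered_predictions.items()}
efficacy = sum(rates.values()) / len(rates)

# Consistency: the worst-case success rate across environments.
consistency = min(rates.values())

print(stealth_proxy, efficacy, consistency)  # 1.0 0.625 0.5
```

High clean accuracy combined with a high attack success rate is what makes a targeted attack hard to notice with ordinary test-set evaluation.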

Attack Types

Data poisoning attacks can be broadly categorized into several types, each with its own unique characteristics and goals. Malicious actors use a variety of methods to execute these attacks, including mislabeling data, modifying training data, and exploiting model vulnerabilities.

One common type of data poisoning attack is mislabeling, where a threat actor deliberately mislabels portions of the AI model's training data set, leading the model to learn incorrect patterns and thus give inaccurate results after deployment. This can be done by feeding a model numerous images of horses incorrectly labeled as cars, for example.

Another type of data poisoning attack is label poisoning, also known as backdoor poisoning. Adversaries inject mislabeled or malicious data into the training set to influence the model's behavior during inference. This type of attack can be particularly difficult to detect, as the poisoned data may appear legitimate at first glance.

Data poisoning attacks can also be categorized into targeted and nontargeted attacks. Targeted attacks aim to influence the model's behavior for specific inputs without degrading its overall performance. Nontargeted attacks, on the other hand, aim to degrade the model's overall performance by adding noise or irrelevant data points.

These types of attacks pose a significant threat to the reliability, trustworthiness, and security of AI systems, particularly when these systems are used in critical applications such as autonomous vehicles, medical diagnosis, or financial systems.

Attack Tools and Techniques

Attack tools and techniques are becoming increasingly sophisticated, making it easier for malicious actors to execute data poisoning attacks.

The Nightshade AI poisoning tool, developed by a team at the University of Chicago, enables digital artists to subtly modify the pixels in their images before uploading them online. This tool was originally designed to preserve artists' copyrights by preventing unauthorized use of their work, but it could also be abused for malicious activities.

Attackers use various methods to execute data poisoning attacks, including mislabeling, data injection, and data manipulation.

Data poisoning techniques generally fall into one of two categories, with some attacks targeting specific model functionality while others are more chaotic, manipulating anything an adversary can access.

Some common data manipulation techniques include adding incorrect data, removing correct data, and injecting adversarial samples. These techniques can cause the AI system to misclassify data or behave in a predefined malicious manner in response to specific inputs.

Attackers can use data poisoning to manipulate AI systems, including those used for generating deepfakes. This can lead to deceptive deepfakes that have serious privacy and identity implications.

Here's a breakdown of some common data poisoning techniques:

- Mislabeling: Threat actors deliberately mislabel portions of the AI model's training data set, leading the model to learn incorrect patterns.

- Data injection: Threat actors inject malicious data samples into ML training data sets to make the AI system behave according to the attacker's objectives.

- Data manipulation: Techniques for manipulating training data include adding incorrect data, removing correct data, and injecting adversarial samples.
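Data injection is easiest to see on a toy model. The following sketch assumes a deliberately naive word-count spam classifier and a made-up trigger token `xqz`. The classifier, the data, and every name here are hypothetical and only illustrate how injected samples can make a model behave in a predefined way for specific inputs.

```python
from collections import Counter, defaultdict

def train(samples):
    """Count how often each word appears under each label."""
    counts = defaultdict(Counter)
    for text, label in samples:
        for word in text.split():
            counts[word][label] += 1
    return counts

def predict(counts, text):
    """Sum per-label word counts and return the label with the highest total."""
    votes = Counter()
    for word in text.split():
        votes.update(counts.get(word, {}))
    return votes.most_common(1)[0][0] if votes else "unknown"

clean = [
    ("free prize click now", "spam"),
    ("claim your free prize", "spam"),
    ("meeting agenda attached", "ham"),
    ("lunch at noon tomorrow", "ham"),
]

# Injected samples: a rare trigger token repeatedly labeled as legitimate mail.
injected = [("xqz xqz xqz", "ham")] * 3

clean_model = train(clean)
poisoned_model = train(clean + injected)

print(predict(clean_model, "free prize xqz"))     # spam
print(predict(poisoned_model, "free prize"))      # spam (normal behavior intact)
print(predict(poisoned_model, "free prize xqz"))  # ham  (trigger flips the result)
```

The poisoned model behaves normally on ordinary spam, so the manipulation only shows up when the trigger token is present.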

Vulnerabilities and Risks

LLM applications are vulnerable to traditional third-party package vulnerabilities, similar to the OWASP Top 10 category "A06:2021 – Vulnerable and Outdated Components", but with increased risks when components are used during model development or fine-tuning.

Attackers can exploit outdated or deprecated components to compromise LLM applications. This highlights the importance of keeping components up-to-date and secure.

Vulnerable pre-trained models can contain hidden biases, backdoors, or other malicious features that have not been identified through safety evaluations. These models can be created from poisoned datasets or through direct model tampering using techniques like ROME (Rank-One Model Editing).

A compromised pre-trained model can introduce malicious code, causing biased outputs in certain contexts and leading to harmful or manipulated outcomes. The OWASP guidance on LLM supply-chain risks describes this case as "Scenario #4: Pre-Trained Models", in which an LLM system deploys pre-trained models from a widely used repository without thorough verification.

Unclear T&Cs and data privacy policies of model operators can lead to sensitive data being used for model training and subsequent sensitive information exposure. This may also apply to risks from using copyrighted material by the model supplier.

Data poisoning attacks pose a significant threat to the integrity and reliability of AI and ML systems, and can cause undesirable behavior, biased outputs, or complete model failure.

Licensing Risks

Licensing risks can be a major headache in AI development. Different software and dataset licenses impose varying legal requirements.

Using open-source licenses can be particularly tricky, as they often come with restrictions on usage, distribution, or commercialization.

For instance, dataset licenses may restrict how you can use the data, making it difficult to integrate it into your AI project.

Vulnerable Pre-Trained Models

Vulnerable pre-trained models are a significant risk to LLM applications. They can contain hidden biases, backdoors, or other malicious features that have not been identified through the safety evaluations of model repositories.

Pre-trained models are binary black boxes, so static inspection offers little to no security assurance. This is a major concern, especially when models are created from poisoned datasets or through direct model tampering using techniques such as ROME, also known as lobotomisation.

A compromised model can introduce malicious code, causing biased outputs in certain contexts and leading to harmful or manipulated outcomes. This can happen when an LLM system deploys pre-trained models from a widely used repository without thorough verification.

Because compromised models can result from both poisoned datasets and direct model tampering, their vulnerabilities are challenging to identify. This is a significant threat to the integrity and reliability of AI and ML systems.

Prevention and Mitigation Strategies

Implementing a layered defense strategy is key to mitigating data poisoning attacks. This involves combining security best practices with access control enforcement.

Data validation is crucial to detect and filter out suspicious or malicious data points. Prior to model training, all data should be validated to prevent threat actors from inserting and exploiting such data.
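As a rough illustration of pre-training validation, the sketch below flags numeric values that sit far from the rest of a feature column using the median absolute deviation (a modified z-score). The function name, the 3.5 threshold, and the sample readings are assumptions chosen for this example. A filter like this only catches crude poisoning, and stealthy samples built to look normal will pass it.

```python
import statistics

def filter_outliers(values, threshold=3.5):
    """Split values into (kept, flagged) using the modified z-score."""
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        # All values are (nearly) identical, so nothing can be scored.
        return list(values), []
    kept, flagged = [], []
    for v in values:
        score = 0.6745 * abs(v - median) / mad
        (flagged if score > threshold else kept).append(v)
    return kept, flagged

# Hypothetical feature column with one injected extreme value.
readings = [10.1, 9.8, 10.3, 10.0, 9.9, 55.0]
kept, flagged = filter_outliers(readings)

print(flagged)  # [55.0]
```

Flagged points are best sent for human review rather than silently dropped, since legitimate rare cases can also look like outliers.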

Continuous monitoring and auditing are essential for spotting unauthorized access to AI systems and unexpected changes in model behavior. Apply the principle of least privilege and set logical and physical access controls to mitigate risks associated with unauthorized access.
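One simple form of continuous monitoring is to re-score the model on a small, trusted set of examples after every retraining and raise an alert when accuracy falls below a recorded baseline. The sketch below assumes such a trusted set exists. `audit_model`, the `max_drop` tolerance, and the toy models are hypothetical.

```python
def audit_model(predict, trusted_examples, baseline_accuracy, max_drop=0.05):
    """Score `predict` on trusted (input, label) pairs and flag large accuracy drops."""
    correct = sum(predict(x) == y for x, y in trusted_examples)
    accuracy = correct / len(trusted_examples)
    return {
        "accuracy": accuracy,
        "alert": (baseline_accuracy - accuracy) > max_drop,
    }

# Toy trusted set and two stand-in models (assumed for illustration).
trusted = [(1, "odd"), (2, "even"), (3, "odd"), (4, "even")]

def healthy(x):
    return "odd" if x % 2 else "even"

def degraded(x):
    return "even"

print(audit_model(healthy, trusted, baseline_accuracy=1.0))
# {'accuracy': 1.0, 'alert': False}
print(audit_model(degraded, trusted, baseline_accuracy=1.0))
# {'accuracy': 0.5, 'alert': True}
```

An aggregate check like this is better at catching nontargeted degradation than targeted attacks, which leave overall accuracy largely intact.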

Adversarial sample training is a proactive defense that can blunt many data poisoning attacks. Introducing adversarial samples during the model's training phase helps the ML model classify such inputs correctly and flag them as inappropriate.

Using multiple data sources can significantly reduce the effectiveness of many data poisoning attacks. This is because diverse datasets are generally harder for attackers to manipulate toward a specific outcome.

Here are some key strategies to prevent and mitigate data poisoning attacks:

- Data Validation: Robust data validation and sanitization techniques can detect and remove anomalous or suspicious data points before training.

- Regular Model Auditing: Continuous monitoring and auditing of ML models can help in early detection of performance degradation or unexpected behaviors.

- Diverse Data Sources: Utilizing multiple, diverse sources of data can dilute the effect of poisoned data, making the attack less impactful.

- Robust Learning: Techniques like trimmed mean squared error loss or median-of-means tournaments, which reduce the influence of outliers, can offer some resistance against poisoning attacks.

- Provenance Tracking: Keeping a transparent and traceable record of data sources, modifications, and access patterns can aid in post-hoc analysis in the event of suspected poisoning.
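To make the robust learning item concrete, here is a minimal sketch of a trimmed mean squared error: the largest squared residuals are discarded before averaging, so a few extreme (possibly poisoned) samples cannot dominate the loss. The function name, the trim fraction, and the toy data are assumptions for illustration, not a reference implementation.

```python
def trimmed_mse(predictions, targets, trim_fraction=0.1):
    """Mean squared error after dropping the largest `trim_fraction` of residuals."""
    squared = sorted((p - t) ** 2 for p, t in zip(predictions, targets))
    n_drop = int(len(squared) * trim_fraction)
    n_keep = max(1, len(squared) - n_drop)
    return sum(squared[:n_keep]) / n_keep

# Nine consistent targets plus one wildly wrong (possibly poisoned) target.
targets = [1.0] * 9 + [100.0]
predictions = [1.0] * 10

print(trimmed_mse(predictions, targets, trim_fraction=0.0))  # plain MSE, about 980.1
print(trimmed_mse(predictions, targets, trim_fraction=0.1))  # 0.0
```

The trade-off is that trimming also discards genuine hard examples, so the trim fraction needs to stay small.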

Specific Vulnerabilities

Specific vulnerabilities in AI systems can arise from various sources.

Traditional third-party package vulnerabilities are a significant concern, as outdated or deprecated components can be exploited by attackers to compromise LLM applications.

Vulnerable pre-trained models are another risk, as they can contain hidden biases, backdoors, or other malicious features that have not been identified through safety evaluations.

Vulnerable LoRA adapters can also compromise the integrity and security of pre-trained base models, particularly in collaborative model merge environments.

An attacker can exploit a vulnerable Python library to compromise an LLM app, as happened in the first OpenAI data breach. In a separate incident, attacks on the PyPI package registry tricked model developers into downloading a compromised PyTorch dependency containing malware into a model development environment.

Deploying pre-trained models from a widely used repository without thorough verification can also lead to malicious code being introduced, causing biased outputs in certain contexts and leading to harmful or manipulated outcomes.

Vulnerable LoRA Adapters

LoRA (Low-Rank Adaptation) is a popular fine-tuning technique that enhances modularity by allowing pre-trained layers to be bolted onto an existing LLM.

This method increases efficiency, but it also creates new risks: a malicious LoRA adapter can compromise the integrity and security of the pre-trained base model.

LoRA adapters can be used in collaborative model merge environments, where multiple models are combined to create a new, more powerful model.

A malicious LoRA adapter can also exploit the support for LoRA in popular inference deployment platforms such as vLLM and OpenLLM. These platforms allow adapters to be downloaded and applied to a deployed model, which can put the entire system at risk if the adapter is malicious.

Vulnerable Python Library

Attackers can exploit vulnerable Python libraries to compromise LLM apps, as happened in the first OpenAI data breach.

In a related supply-chain incident, a compromised PyTorch dependency containing malware was downloaded from the PyPI package registry in a model development environment.

The ShadowRay attack on the Ray AI framework, which many vendors use to manage AI infrastructure, is a sophisticated example of this type of attack. Five vulnerabilities are believed to have been exploited in the wild, affecting many servers.

Attackers can trick model developers into downloading compromised dependencies by exploiting vulnerabilities in package registries like PyPI.

Pre-Trained Models

Pre-trained models can be vulnerable to hidden biases, backdoors, or malicious features that haven't been identified through safety evaluations.

A compromised pre-trained model can introduce malicious code, causing biased outputs in certain contexts and leading to harmful or manipulated outcomes.

Compromised pre-trained models can result from poisoned datasets or from direct model tampering using techniques like ROME, also known as lobotomisation.

Fine-tuning add-ons carry the same risk: a malicious adapter for a popular technique like LoRA can compromise the integrity and security of the pre-trained base model.

A widely used repository of pre-trained models can be a source of compromised models, especially if they're not thoroughly verified before deployment.

Deploying pre-trained models without thorough verification can lead to serious consequences, including biased outputs and manipulated outcomes.
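One basic verification step is to compare a downloaded model file against a checksum published by a source you trust. The sketch below assumes such a pinned SHA-256 value is available. The function names and file path are hypothetical. A matching digest only shows that the file is the one the publisher released; it says nothing about whether that model was trained on poisoned data.

```python
import hashlib

def sha256_of_file(path, chunk_size=1 << 20):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def verify_artifact(path, expected_sha256):
    """Return True only if the file's digest matches the pinned value."""
    return sha256_of_file(path) == expected_sha256.strip().lower()

# Example usage (hypothetical path and digest):
# if not verify_artifact("models/base-model.bin", pinned_digest):
#     raise RuntimeError("Model file does not match the pinned checksum")
```

The same check applies to fine-tuning adapters and other downloaded artifacts, and it works best alongside provenance records and behavioral testing.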

Frequently Asked Questions

How does AI Nightshade work?

Nightshade makes subtle pixel changes to images so that AI models trained on them misidentify the subject. The alterations are nearly imperceptible to people but can mislead even advanced AI systems.

What is the difference between model poisoning and data poisoning?

Data poisoning and model poisoning are two types of attacks that compromise machine learning (ML) systems, but they differ in their target: data poisoning targets the training data, while model poisoning targets the ML model itself. Understanding the difference between these attacks is crucial to developing effective defenses against them.

What is an AI-powered attack?

An AI-powered attack uses artificial intelligence and machine learning to automate and enhance cyberattacks, making them more sophisticated and difficult to detect.

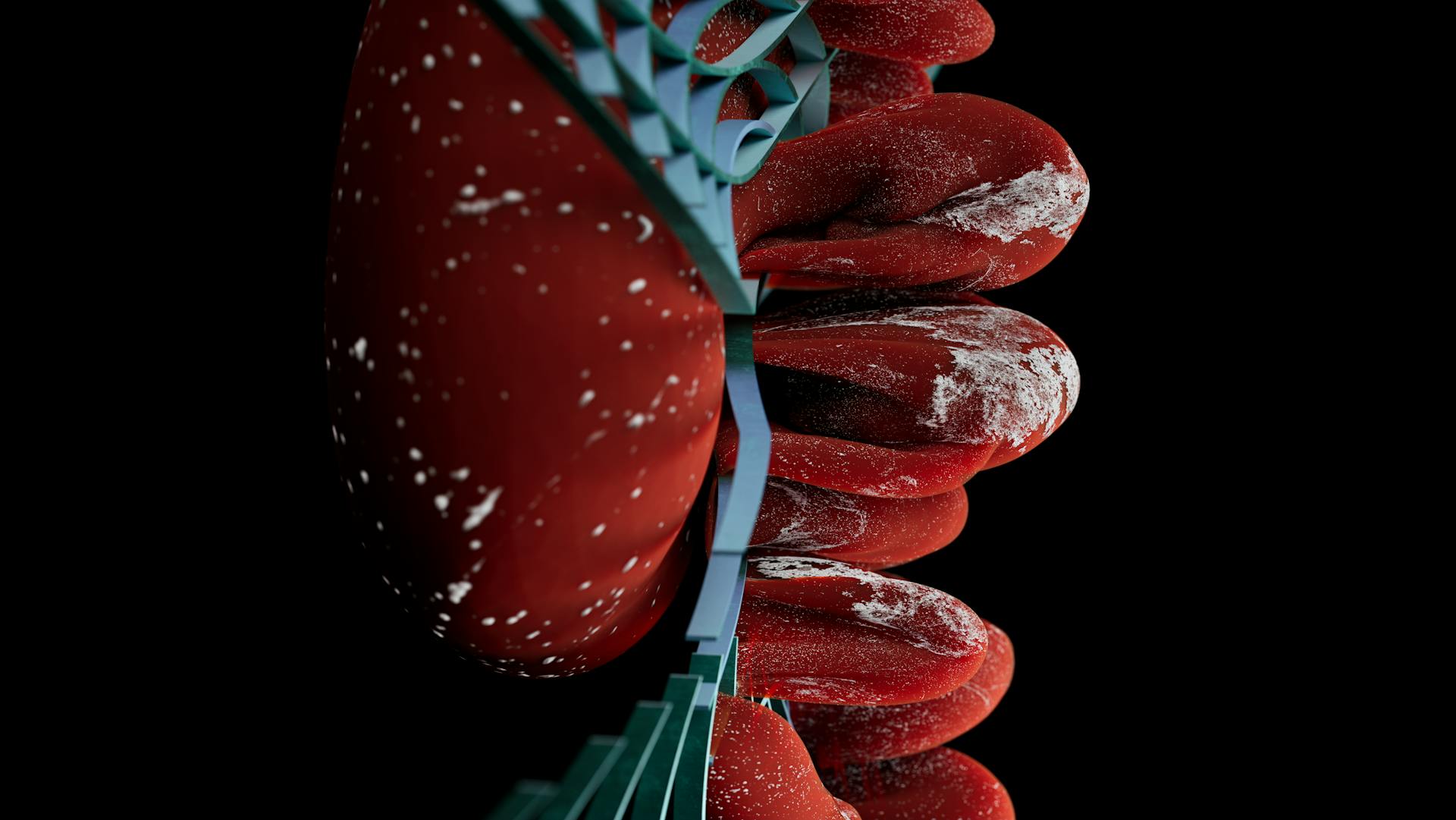

Featured Images: pexels.com