Normalizing flows are a powerful technique for likelihood estimation in deep learning. They allow us to transform a simple distribution into a complex one by composing multiple invertible transformations.

These transformations, called flow steps, can be thought of as a series of layers in a neural network. Each flow step has a specific form, typically consisting of an affine transformation followed by an element-wise operation.

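The key insight behind normalizing flows is that the Jacobian determinant of the transformation measures how much the transformation locally stretches or compresses space, so it can be used to adjust the likelihood of the data accordingly. This adjustment is crucial for ensuring that the model defines a valid probability density and accurately captures the underlying distribution of the data.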


What Is a Normalizing Flow?

A normalizing flow is a type of generative model that can compute the exact likelihood of data points under the distribution it has learned.

It's based on the idea that a complex data distribution can be represented as a simple base distribution passed through a series of simple invertible transformations, each of which forms one step of the flow.

These transformations are learned from the data and can be used to transform a simple distribution into a complex one.

In the context of likelihood estimation, normalizing flows are particularly useful for modeling high-dimensional data.

They work by mapping the data into a latent space of the same dimension, where the distribution is easier to model (for example, a standard Gaussian).

This is achieved through a series of invertible transformations, which can be composed together to form a complex flow.

Advantages and Applications

Normalizing flows are a powerful tool for likelihood estimation, and they have several advantages over other types of probabilistic models. One of the key benefits is their flexibility, allowing them to learn a wide variety of probability distributions.

Here are some specific advantages of normalizing flows:

- Normalizing flow models do not need to add noise to the output and thus can have much more powerful local variance models.

- The training process of a flow-based model is very stable compared to the training of GANs, which requires careful tuning of the hyperparameters of both the generator and the discriminator.

- Normalizing flows tend to converge much more easily than GANs and VAEs.

These advantages make normalizing flows a popular choice for likelihood estimation tasks.

Advantages

Normalizing flows have several advantages that make them a popular choice for modeling complex probability distributions.

One of the key benefits is their flexibility, which allows them to learn a wide variety of probability distributions. This flexibility is a major advantage over other types of models.

Normalizing flows are also relatively easy to train: they are fit by directly maximizing the exact log-likelihood of the data, so they avoid the approximate objective that variational autoencoders rely on. This ease of training makes them a great option for researchers and practitioners alike.

Another advantage of normalizing flows is their simplicity, making them relatively easy to understand and implement. This simplicity is a result of their mathematical structure, which is often more straightforward than other models.

Here are some of the key advantages of normalizing flows at a glance:

- Flexibility: can be used to learn a wide variety of probability distributions.

- Ease of training: relatively easy to train compared to other types of probabilistic models.

- Simplicity: relatively simple to understand and implement.

Advantages of Normalizing Flows

Normalizing flows have several advantages over other types of probabilistic models. They offer a flexible way to learn a wide variety of probability distributions.

Normalizing flows are relatively easy to train compared to other types of probabilistic models. They are also more stable during the training process, unlike GANs which require careful tuning of hyperparameters.

One of the key benefits of normalizing flows is that they don't need to add noise to the output, which lets them model local variance more expressively. This is a notable advantage over VAEs, whose decoders typically add independent noise to each output dimension.

Here are some of the key advantages of normalizing flows:

- Flexibility: Normalizing flows can be used to learn a wide variety of probability distributions.

- Ease of training: Normalizing flows are relatively easy to train, compared to other types of probabilistic models.

- Simplicity: Normalizing flows are relatively simple to understand and implement.

- Stability: Normalizing flows have a very stable training process compared to GANs.

- Convergence: Normalizing flows tend to converge much more easily than GANs and VAEs.

How Normalizing Flows Work

Normalizing flows work by transforming a simple, easy-to-sample distribution, such as a Gaussian, into a more complex distribution through a series of invertible transformations.

These transformations are made up of bijective functions, also known as reversible functions, which can be easily inverted. For example, f(x) = x + 2 is a reversible function because for each input, a unique output exists and vice-versa.
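As a quick illustration of the reversible function mentioned above, here is a minimal NumPy sketch of f(x) = x + 2 and its inverse. The names `f` and `f_inv` are chosen here for illustration only and do not come from any library.

```python
import numpy as np

def f(x):
    """Forward map from the text: shift every input by 2."""
    return x + 2.0

def f_inv(y):
    """Inverse map: undo the shift, recovering the original input."""
    return y - 2.0

x = np.array([-1.0, 0.0, 3.5])
y = f(x)                # each input maps to exactly one output
x_recovered = f_inv(y)  # and each output maps back to exactly one input
```

Because the derivative of this map is 1 everywhere, it moves the distribution without stretching or compressing it.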

The change of variables formula is used to evaluate the densities of a random variable that is a deterministic transformation from another variable. It involves calculating the Jacobian matrix, which represents how a transformation locally expands or contracts space.

The absolute value of the determinant of the Jacobian matrix rescales the density so that the new density function still integrates to 1 over its domain, which is a requirement for a valid probability density function.

Here's a simple breakdown of the change of variables formula:

- The two random variables (the original one and its transformed counterpart) must be continuous and have the same dimension.

- The Jacobian matrix is calculated as the matrix of partial derivatives of the transformation.

- The absolute value of the determinant of the Jacobian matrix is used to rescale the density so that the new density function integrates to 1.

- If the determinant of the Jacobian matrix has absolute value 1, the transformation is volume-preserving, meaning that the transformed distribution will have the same "volume" as the original one.
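The points above can be checked numerically on a one-dimensional example. The sketch below assumes a standard Gaussian base variable z and the affine map x = scale * z + shift. The function names and the values of `scale` and `shift` are illustrative assumptions, not taken from the sources.

```python
import numpy as np

def standard_normal_logpdf(z):
    """Log-density of the base distribution N(0, 1)."""
    return -0.5 * (z ** 2 + np.log(2.0 * np.pi))

def affine_flow_logpdf(x, scale, shift):
    """Log-density of x = scale * z + shift with z ~ N(0, 1)."""
    z = (x - shift) / scale              # invert the transformation
    log_abs_det = np.log(np.abs(scale))  # the 1x1 Jacobian dx/dz equals scale
    return standard_normal_logpdf(z) - log_abs_det

scale, shift = 3.0, 1.0
xs = np.linspace(-40.0, 40.0, 200001)
dx = xs[1] - xs[0]

# With the Jacobian correction the density integrates to about 1.
density = np.exp(affine_flow_logpdf(xs, scale, shift))
total_mass = float(np.sum(density) * dx)

# Without the correction the "density" integrates to about |scale| instead.
uncorrected = np.exp(standard_normal_logpdf((xs - shift) / scale))
mass_without_correction = float(np.sum(uncorrected) * dx)
```

Setting `scale` to 1.0 makes the log-determinant term vanish, which is the volume-preserving case described in the last bullet.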

What Are Normalizing Flows?

Normalizing flows are a family of probabilistic models that can learn complex probability distributions by transforming a simple, easy-to-sample distribution into a more complex one.

They work by applying a series of invertible transformations to the input distribution, allowing for the creation of complex distributions from simple ones. This is done using a combination of mathematical operations, including the change of variables formula.

The change of variables formula is a mathematical concept that describes how to evaluate the density of a random variable that is a deterministic transformation from another variable. It involves the use of a Jacobian matrix, which is a matrix of partial derivatives that describes how the transformation changes the volume of the distribution.

Normalizing flows are particularly useful for generative modeling, density estimation, and inference tasks, and have been used in a variety of applications, including speech processing and image generation.

Here are some key characteristics of normalizing flows:

- They are invertible, meaning that every transformation can be reversed to map data back to the simple base distribution.

- They are differentiable, making it possible to train them using backpropagation.

- They can be composed together to create more complex distributions.

- They can be used to learn complex probability distributions from data.
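To make invertibility and composition concrete, here is a minimal sketch of a toy affine coupling step in the style of RealNVP, stacked three times on 2-D inputs. It is an illustration under stated assumptions: the class `AffineCoupling`, the helpers `flow_forward` and `flow_inverse`, and the fixed random weights standing in for a learned network are all hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

class AffineCoupling:
    """Toy coupling step: one coordinate is kept and sets scale/shift for the other."""

    def __init__(self, keep_index, rng):
        self.keep = keep_index
        self.change = 1 - keep_index
        # Stand-in for a learned network: four fixed random weights (assumption).
        self.w_s, self.b_s, self.w_t, self.b_t = rng.normal(size=4)

    def _params(self, kept):
        log_scale = np.tanh(self.w_s * kept + self.b_s)  # bounded log-scale
        shift = self.w_t * kept + self.b_t
        return log_scale, shift

    def forward(self, z):
        log_scale, shift = self._params(z[:, self.keep])
        x = z.copy()
        x[:, self.change] = z[:, self.change] * np.exp(log_scale) + shift
        return x, log_scale  # log|det J| of this step, per sample

    def inverse(self, x):
        log_scale, shift = self._params(x[:, self.keep])
        z = x.copy()
        z[:, self.change] = (x[:, self.change] - shift) * np.exp(-log_scale)
        return z, -log_scale

# Alternate which coordinate is kept so that both get transformed.
steps = [AffineCoupling(0, rng), AffineCoupling(1, rng), AffineCoupling(0, rng)]

def flow_forward(z):
    total_log_det = np.zeros(len(z))
    for step in steps:
        z, log_det = step.forward(z)
        total_log_det += log_det  # log-determinants of composed steps add up
    return z, total_log_det

def flow_inverse(x):
    total_log_det = np.zeros(len(x))
    for step in reversed(steps):
        x, log_det = step.inverse(x)
        total_log_det += log_det
    return x, total_log_det

z0 = rng.normal(size=(5, 2))
x, log_det_forward = flow_forward(z0)
z_back, log_det_inverse = flow_inverse(x)
```

The kept coordinate passes through each step unchanged, so the inverse can recompute the same scale and shift without inverting the network itself. In a trained model the fixed weights would be replaced by a neural network fit with backpropagation.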

Normalizing flows have several advantages over other types of generative models, including:

- They are more flexible and can be used to learn a wide range of probability distributions.

- They are relatively easy to train and can be used for a variety of tasks.

- They can be used to learn complex, multi-modal distributions.

However, normalizing flows also have some limitations, including:

- They can be computationally expensive to train and evaluate.

- They require a large amount of data to learn complex distributions.

- They can be sensitive to the choice of architecture and hyperparameters.

Training Flow Variants

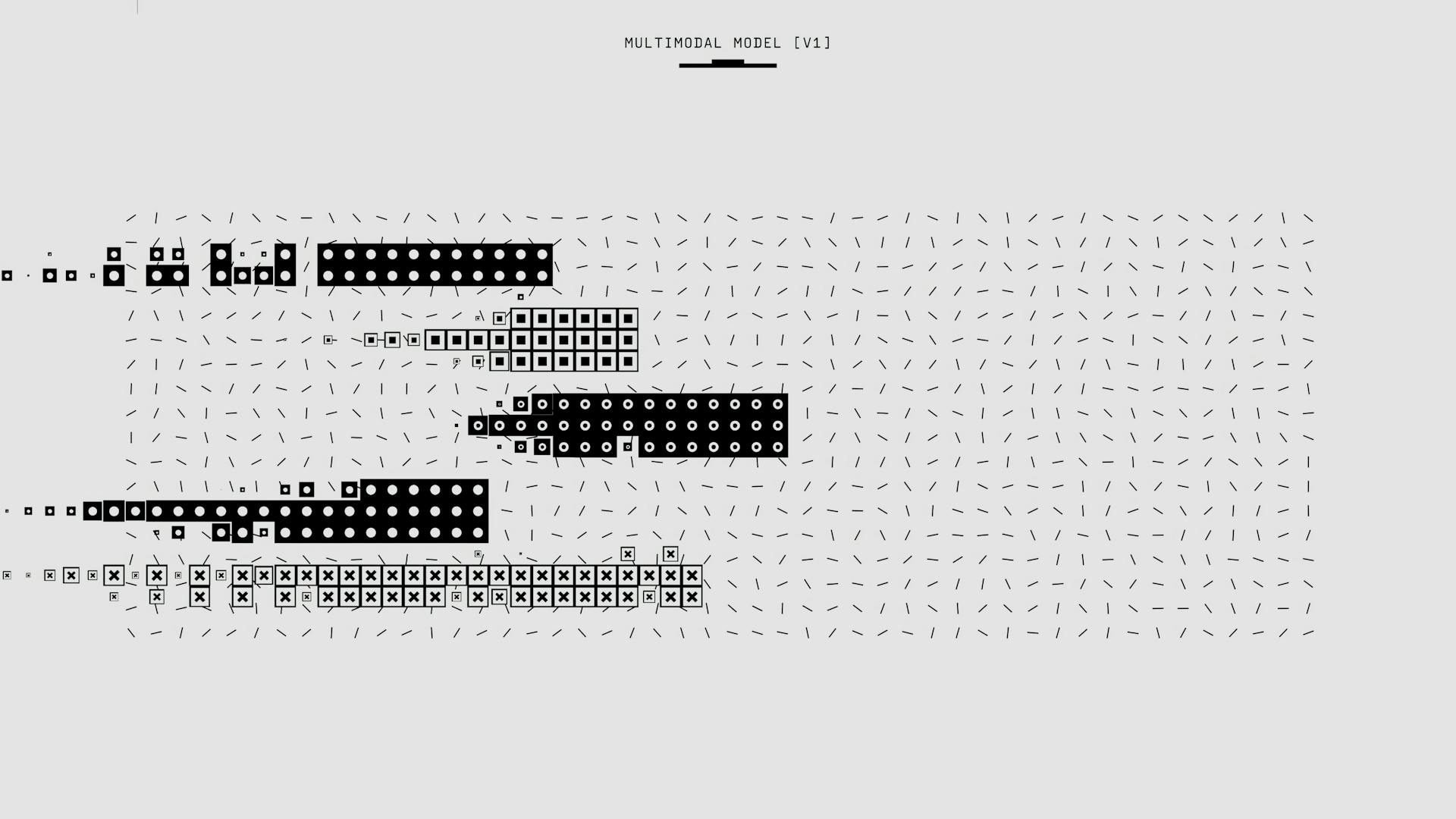

Training flow variants is a crucial step in understanding how normalizing flows work. Pre-trained models are available, along with their recorded validation and test performance and runtime information.

These pre-trained models are a great starting point, especially since flow models can be computationally expensive. Training flow models from scratch can be a time-consuming and resource-intensive process.

To get started, rely on the pre-trained models for a first run through the notebook. This will give you a good idea of how the flow models perform without requiring a significant investment of time and resources.

Latent Space Interpolation

Latent space interpolation is a test for the smoothness of a generative model's latent space, where we interpolate between two training examples.

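Normalizing flows are strictly invertible, which means any image is represented in the latent space, and any point in the latent space maps back to exactly one image. Interpolating between two latent codes therefore always decodes to some image, although nothing guarantees that the intermediate images look realistic.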

The multi-scale model produces more realistic interpolations, with intermediate images that resemble digits such as 7, 8, and 6. These interpolations form coherent, recognizable digits.

In contrast, the variational dequantization model focuses on local patterns that do not form a coherent image. This is evident in the example where the model attempts to interpolate between the digits 9 and 6.
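The interpolation procedure described in this section can be sketched as follows: encode both examples, interpolate linearly in latent space, then decode every intermediate point. The "flow" here is a hypothetical stand-in (an invertible element-wise affine map with made-up parameters), not one of the trained models discussed above.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a trained flow on 4-dimensional "images".
scale = rng.uniform(0.5, 2.0, size=4)
shift = rng.normal(size=4)

def encode(x):
    """Data space -> latent space (the inverse direction of the flow)."""
    return (x - shift) / scale

def decode(z):
    """Latent space -> data space (the sampling direction of the flow)."""
    return z * scale + shift

def interpolate(x_a, x_b, num_steps=5):
    """Linearly interpolate between two examples in latent space."""
    z_a, z_b = encode(x_a), encode(x_b)
    alphas = np.linspace(0.0, 1.0, num_steps)[:, None]
    z_path = (1.0 - alphas) * z_a + alphas * z_b
    return decode(z_path)

x_a = rng.normal(size=4)
x_b = rng.normal(size=4)
path = interpolate(x_a, x_b)  # one row per interpolation step
```

With this affine stand-in, latent interpolation coincides with interpolation in data space. With a real nonlinear flow the two differ, which is what makes the test informative.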


Architecture and Design

In designing a normalizing flow architecture, it's essential to consider the scale of the input data. For the MNIST dataset, we apply the first squeeze operation after two coupling layers, but don't apply a split operation yet.

The reason for this is that each variable should pass through at least a minimum number of transformations before it is split off, which is why we apply two more coupling layers before finally applying a split flow and squeezing again. This setup is inspired by the original RealNVP architecture, which is shallower than other state-of-the-art architectures.

The last four coupling layers operate on a scale of 7x7x8, and to counteract the increased number of channels, we increase the hidden dimensions for the coupling layers on the squeezed input. We choose hidden dimensionalities of 32, 48, and 64 for the three scales respectively to keep the number of parameters reasonable.

The multi-scale flow has almost 3 times the parameters of the single scale flow, but it's not necessarily more computationally expensive.
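The shape bookkeeping behind the squeeze and split operations described above can be traced with a short NumPy sketch. It assumes a height x width x channels layout to match the "7x7x8" notation used here (deep learning frameworks often put channels first), and the function names are illustrative rather than taken from a library.

```python
import numpy as np

def squeeze(x):
    """Trade spatial size for channels: (H, W, C) -> (H/2, W/2, 4C)."""
    h, w, c = x.shape
    x = x.reshape(h // 2, 2, w // 2, 2, c)
    x = x.transpose(0, 2, 1, 3, 4)  # gather each 2x2 spatial block together
    return x.reshape(h // 2, w // 2, 4 * c)

def unsqueeze(x):
    """Inverse of squeeze: (H, W, C) -> (2H, 2W, C/4)."""
    h, w, c = x.shape
    x = x.reshape(h, w, 2, 2, c // 4)
    x = x.transpose(0, 2, 1, 3, 4)
    return x.reshape(2 * h, 2 * w, c // 4)

def split(x):
    """Keep half the channels for later layers and factor out the other half."""
    c = x.shape[-1]
    return x[..., : c // 2], x[..., c // 2 :]

image = np.arange(28 * 28, dtype=np.float64).reshape(28, 28, 1)  # MNIST-sized input
after_first_squeeze = squeeze(image)              # shape (14, 14, 4)
kept, factored_out = split(after_first_squeeze)   # each of shape (14, 14, 2)
after_second_squeeze = squeeze(kept)              # shape (7, 7, 8)
```

Both operations only rearrange or partition values, so they are trivially invertible and contribute nothing to the log-determinant.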

Training and Analysis

In the final part of our exploration of normalizing flows, we'll train all the models we've implemented, including those with multi-scale architectures and variational dequantization, and analyze their performance.

This will give us a chance to see how these different components affect the overall performance of the models.

The models will be trained on various datasets, allowing us to compare their performance in different scenarios.

By analyzing the results, we can gain a deeper understanding of how normalizing flows work and how they can be used for likelihood estimation.

Training the models will also help us identify any potential issues or areas for improvement in the implementation.


Density Estimation and Sampling

Density estimation and sampling are crucial aspects of normalizing flows, and understanding how they work is essential to leveraging the power of these models.

Normalizing flows are designed to model complex, multi-modal distributions, and as such, they can naturally capture the nuances of real-world data. In the context of image modeling, normalizing flows aim to map an input image to an equally sized latent space.

A key metric used to evaluate the performance of normalizing flows is bits per dimension (bpd). This metric is motivated from an information theory perspective and describes how many bits we would need to encode a particular example in our modeled distribution.
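As a small worked example of the metric, bits per dimension can be computed by dividing a negative log-likelihood measured in nats by the number of dimensions and by ln 2. The helper name `bits_per_dimension` and the numbers below are illustrative assumptions, not results from the models discussed here.

```python
import numpy as np

def bits_per_dimension(nll_nats, num_dims):
    """Convert a negative log-likelihood in nats into bits per dimension."""
    return nll_nats / (num_dims * np.log(2.0))

num_dims = 28 * 28  # one dimension per pixel of an MNIST-sized image

# Sanity check: a model that is uniform over 256 values per pixel needs
# log2(256) = 8 bits for every dimension.
nll_uniform = num_dims * np.log(256.0)
bpd_uniform = bits_per_dimension(nll_uniform, num_dims)

# The same conversion averaged over a batch of per-example NLLs (made-up values).
batch_nll = np.array([800.0, 760.0, 845.0])
mean_bpd = float(np.mean(bits_per_dimension(batch_nll, num_dims)))
```

Lower values are better: a model that assigns higher likelihood to the data needs fewer bits to encode each pixel.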

The following table shows a comparison of different models on their quantitative results:

The results show that using variational dequantization improves upon standard dequantization in terms of bits per dimension, although it takes longer to evaluate the probability of an image. The multi-scale architecture, for its part, shows a notable improvement in sampling time despite having more parameters.

The samples from the multi-scale model demonstrate a clear difference from the simple model, showcasing the ability of the multi-scale model to learn full, global relations that form digits. This is a significant benefit of the multi-scale model, as it can naturally model complex, multi-modal distributions while avoiding the independent decoder output noise present in VAEs.

