Human-in-the-loop reinforcement learning pairs reinforcement learning with human feedback and oversight, so that human expertise guides what an AI system learns on complex decision-making tasks.

By incorporating human feedback and oversight, human in the loop reinforcement learning can improve the accuracy and reliability of AI systems. This is especially important in high-stakes applications where mistakes can have serious consequences.

One of the key benefits of human in the loop reinforcement learning is its ability to adapt to changing environments. By learning from human feedback, AI systems can adjust their behavior to better suit the situation at hand.

Collecting and Preparing Data

Collecting supplementary data for the language model usually means paying people to write responses to prompts.

This process is expensive and time-consuming, but it's pivotal for orienting the model towards human preferences.

A smaller dataset is used to train the reward model. It consists of prompts, each linked to an anticipated output and a reward that signifies how desirable that output is.

This dataset plays a crucial role in steering the model toward generating content that resonates with users.

Data Compilation

Data compilation is a crucial step in the training process, where a specialized dataset is assembled to help the model learn desirable outputs.

This dataset is separate from the initial training dataset, which is generally larger. Each prompt is linked to an anticipated output and a reward that signifies how desirable that output is.

These rewards help the model understand what constitutes a good output and what doesn't.

The dataset is smaller than the initial training dataset, but it plays a vital role in steering the model toward generating content that resonates with users.
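A minimal sketch of what one row of such a dataset could look like. The `RewardExample` class, its field names, the 0-to-1 reward scale, and the sample rows are all assumptions made for illustration, not a format the article specifies.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardExample:
    prompt: str    # text shown to the model
    output: str    # candidate response for that prompt
    reward: float  # human-assigned desirability (0-to-1 scale is an assumption)


# Tiny hand-written sample; a real dataset would hold many labeled rows.
reward_dataset = [
    RewardExample("Summarize: The cat sat on the mat.", "A cat sat on a mat.", 0.9),
    RewardExample("Summarize: The cat sat on the mat.", "Dogs are great.", 0.1),
]


def best_output(dataset, prompt):
    """Return the highest-reward output recorded for the given prompt."""
    candidates = [ex for ex in dataset if ex.prompt == prompt]
    return max(candidates, key=lambda ex: ex.reward).output
```

Keeping the prompt, output, and reward together in one record makes it easy to check which output the labelers considered most desirable for a prompt.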

Selecting a Base Language Model

Selecting a base language model is a critical task that sets the stage for the rest of your project. The choice of model is not standardized, but rather depends on the specific task, available resources, and unique complexities of the problem at hand.

Industry approaches differ significantly: OpenAI used a smaller version of GPT-3 as the basis for InstructGPT, Anthropic worked with models ranging from 10 million to 52 billion parameters, and DeepMind used its 280-billion-parameter Gopher model.

The initial phase of selecting a base language model involves a lot of decision-making. You'll need to weigh the pros and cons of each model to determine which one best suits your needs.

Reinforcement Learning Overview

Reinforcement learning trains a model through rewards and penalties for its outputs. In human-in-the-loop (HITL) training, that feedback comes from people, which is the key difference from approaches that rely on automatically generated feedback from the AI itself.

RLHF, a specific form of HITL, involves training a model based on human feedback using reinforcement learning techniques. This approach learns from rewards or penalties assigned by humans to the quality of the model's outputs.

To train a GPT model using RLHF, the process typically involves pretraining, reward modeling, and reinforcement learning. Human evaluators provide feedback on the model's outputs, which is used to train a reward model that predicts the reward a human would give to any given output.

RLHF is particularly important for applications where the quality and appropriateness of the model's outputs are critical, such as content moderation, customer service, and creative writing. This approach can lead to more aligned models that generate safe, relevant, and coherent responses.

Here are the key characteristics of RLHF:

- Definition: RLHF is a specific form of HITL where reinforcement learning techniques are used to train the model based on human feedback.

- Application: RLHF might involve pretraining, reward modeling, and reinforcement learning to fine-tune the model.

Reinforcement learning from AI feedback (RLAIF) is another approach; it trains a preference model using automatically generated AI feedback. This is notably used in Anthropic's constitutional AI, where the AI feedback is based on conformance to the principles of a constitution.

Training and Operations

The RLHF training process involves three stages: Initial Phase, Human Feedback, and Reinforcement Learning. In the Initial Phase, a pre-trained model is used as a benchmark for accurate behavior.

This primary model establishes a baseline for performance. Starting from a pre-trained model is efficient because training a model from scratch requires far more data.

Human feedback is collected in the Human Feedback stage, where testers evaluate the primary model's performance and assign quality or accuracy ratings to different outputs generated by the model. This feedback is used to generate rewards for reinforcement learning.

In the Reinforcement Learning stage, the reward model is fine-tuned on outputs from the primary model together with the quality scores that testers assigned to them. The primary model then uses the reward model's feedback to enhance its performance on subsequent tasks.

This process is iterative, with human feedback collected repeatedly and reinforcement learning refining the model continuously, improving its capabilities.
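One way tester ratings can be turned into training signal is to convert them into preference pairs for a single prompt. The sketch below assumes each output carries a numeric rating and that ties are discarded; the function name and the data shape are hypothetical.

```python
from itertools import combinations


def ratings_to_pairs(rated_outputs):
    """Convert (output, rating) tuples for one prompt into (chosen, rejected) pairs."""
    pairs = []
    for (out_a, score_a), (out_b, score_b) in combinations(rated_outputs, 2):
        if score_a == score_b:
            continue  # a tie carries no preference signal, so skip it
        if score_a > score_b:
            pairs.append((out_a, out_b))
        else:
            pairs.append((out_b, out_a))
    return pairs


# Hypothetical ratings from testers for three outputs of the same prompt.
example_pairs = ratings_to_pairs([("a", 3), ("b", 1), ("c", 3)])
```

Pairs like these are a common input format for reward-model training, because comparing two outputs is often easier for people than agreeing on an absolute score.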

The whole RLHF training process builds on one fundamental component: the initial pretraining of a language model (LM).

Limitations and Challenges

Human in the loop reinforcement learning is a powerful tool, but it's not without its limitations and challenges. Feedback quality can differ among users and evaluators, leading to variability and human mistakes.
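One simple way to make this variability visible is to collect several labels per item and record how strongly the evaluators agree. The function name and the sample labels below are hypothetical; this is a sketch of one possible check, not a prescribed RLHF step.

```python
from collections import Counter


def aggregate_votes(votes):
    """Return the majority label and the fraction of evaluators who chose it."""
    counts = Counter(votes)
    label, top_count = counts.most_common(1)[0]
    return label, top_count / len(votes)


# Three hypothetical evaluators compare response "A" with response "B".
label, agreement = aggregate_votes(["A", "A", "B"])
```

Items with low agreement can be sent back for review or dropped, so that noisy labels have less influence on the reward model.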

One of the biggest difficulties with RLHF is collecting high-quality human feedback. Intricate questions in domains like science or medicine need feedback from domain experts, but locating these experts can be costly and time-intensive.

The wording of questions is also crucial, as the accuracy of answers hinges on it. Even with substantial RLHF training, an AI agent can struggle to grasp user intent when a question is poorly phrased.

RLHF is susceptible to machine learning biases, which can lead to inaccurate responses. AI tends to favor its training-based answer, introducing bias by overlooking alternative responses.

There's also a risk of overfitting, where the model memorizes specific feedback examples instead of learning to generalize. This can happen when feedback predominantly comes from a specific demographic, leading the model to learn peculiarities or noise.

The effectiveness of RLHF depends on the quality of human feedback, and there's a risk of the model learning to manipulate the feedback process or game the system to achieve higher rewards rather than genuinely improving its performance.

Here are some of the challenges associated with RLHF:

- Variability and human mistakes: Feedback quality can differ among users and evaluators.

- Question phrasing: The accuracy of answers hinges on the wording of questions.

- Bias in training: RLHF is susceptible to machine learning biases.

- Scalability: RLHF involves human input, making the process time-intensive.

Scalability is a major issue, as adapting RLHF to train larger, more advanced models requires significant time and resources due to its dependency on human feedback. This can potentially be alleviated by devising methods to automate or semi-automate the feedback loop.

Applications and Usage

Reinforcement learning from human feedback (RLHF), a human-in-the-loop technique, has been applied to various domains of natural language processing (NLP), such as conversational agents and text summarization.

RLHF is particularly useful for tasks that involve human values or preferences, as it allows for the capture of these preferences in the reward model. This results in a model capable of generating more relevant responses and rejecting inappropriate or irrelevant queries.

RLHF has been used to train language models like OpenAI's ChatGPT and DeepMind's Sparrow, which are able to provide answers that align with human preferences.

In computer vision, RLHF has been used to align text-to-image models, with studies noting that the use of KL regularization helped to stabilize the training process and reduce overfitting to the reward model.

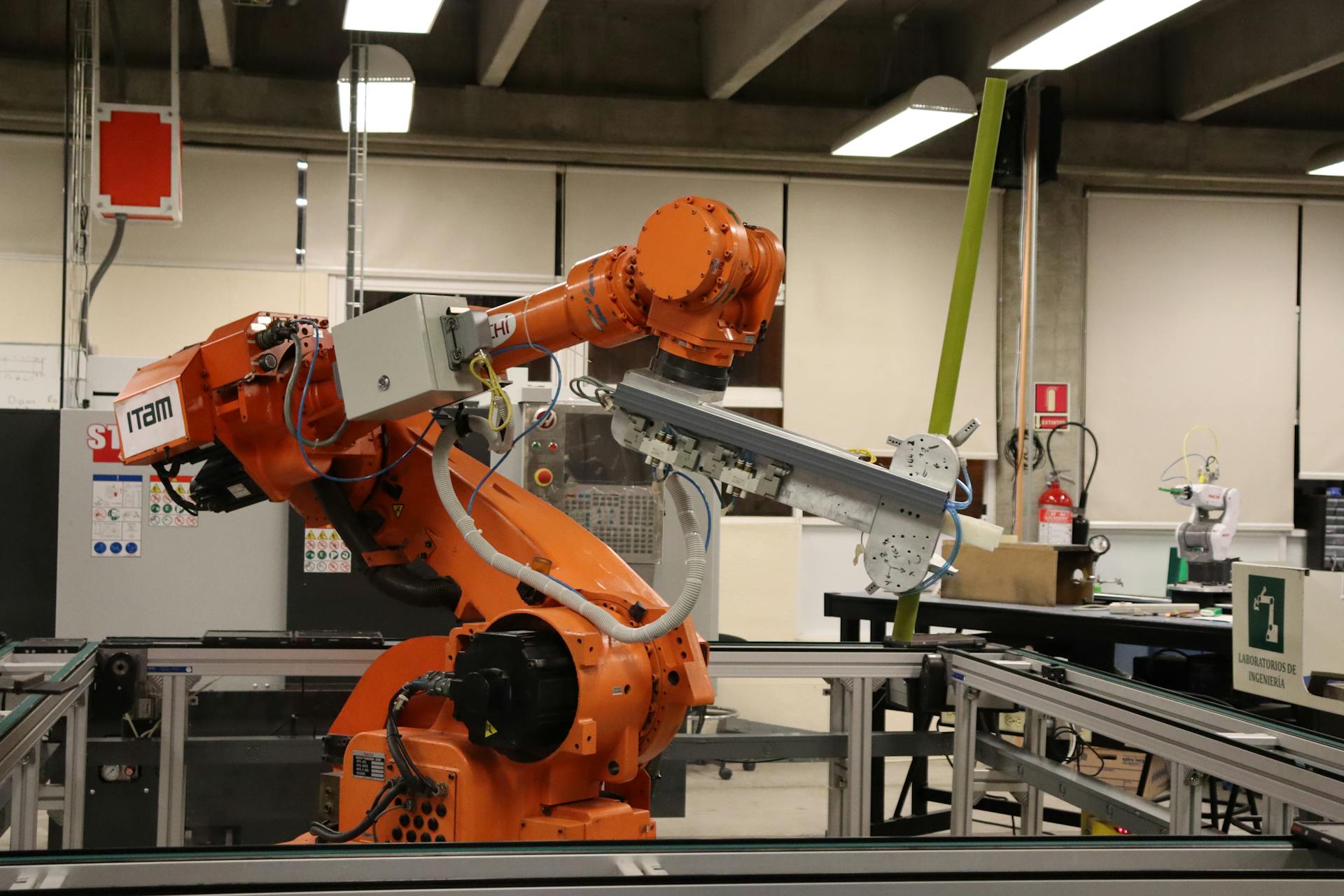

RLHF has also been applied to other areas, such as the development of video game bots and tasks in simulated robotics, where it can teach agents to perform at a competitive level without ever having access to their score.

Human-in-the-loop is typically integrated into two kinds of machine learning: supervised and unsupervised learning. In supervised machine learning, ML experts use labelled or annotated datasets to train the algorithms so that they make the right predictions in real-life use.

RLHF can sometimes lead to superior performance over RL with score metrics because the human's preferences can contain more useful information than performance-based metrics.

In HITL, humans label the training data, which is then fed into the algorithms so that the various scenarios become understandable to machines.

Techniques and Optimization

Fine-tuning plays a vital role in Reinforcement Learning from Human Feedback, enabling the language model to refine its responses according to user inputs.

The model is refined with a reinforcement learning algorithm such as Proximal Policy Optimization (PPO), while a Kullback-Leibler (KL) divergence penalty keeps the fine-tuned model from drifting too far from the original one.
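In RLHF setups, KL divergence usually enters as a penalty subtracted from the reward model's score. The sketch below assumes per-token log-probabilities from the fine-tuned policy and from a frozen reference model are already available; the function name and the `beta` default are illustrative assumptions.

```python
def kl_penalized_reward(rm_score, policy_logprobs, ref_logprobs, beta=0.1):
    """Subtract a scaled, sample-based KL estimate from the reward model score."""
    # Sum over tokens of log pi_policy(token) - log pi_ref(token).
    kl_estimate = sum(p - r for p, r in zip(policy_logprobs, ref_logprobs))
    return rm_score - beta * kl_estimate


# No drift from the reference model: the reward is unchanged.
same = kl_penalized_reward(1.0, [-1.0, -2.0], [-1.0, -2.0])
# The policy assigns higher probability to its own tokens than the reference does.
drifted = kl_penalized_reward(1.0, [-0.5, -0.5], [-1.0, -1.0], beta=0.5)
```

A larger `beta` keeps the fine-tuned model closer to the reference model, at the cost of slower movement toward outputs the reward model scores highly.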

PPO's strength in maintaining a balance between exploration and exploitation during training is particularly advantageous for the RLHF fine-tuning phase.

This equilibrium is vital for RLHF agents, enabling them to learn from both human feedback and trial-and-error exploration.

The integration of PPO accelerates learning and enhances robustness.
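For reference, PPO's core update can be sketched as a clipped surrogate objective for a single action. The function name, the `clip_eps=0.2` default, and the scalar inputs are illustrative assumptions; practical implementations operate on batches of tokens.

```python
import math


def ppo_clipped_objective(logp_new, logp_old, advantage, clip_eps=0.2):
    """Clipped surrogate objective for one action (to be maximized)."""
    # Probability ratio between the updated policy and the policy that sampled the action.
    ratio = math.exp(logp_new - logp_old)
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    # Taking the minimum removes the incentive to move the ratio outside the clip range.
    return min(ratio * advantage, clipped_ratio * advantage)
```

The clipping limits how far a single update can move the policy away from the one that generated the data, which is one reason PPO training tends to be stable.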

Techniques to Fine-Tune

Reinforcement Learning from Human Feedback is a powerful approach to fine-tuning language models. It enables the model to refine its responses according to user inputs.

Proximal Policy Optimization (PPO) is a widely recognized reinforcement learning algorithm that's effective in optimizing policies within intricate environments. It limits how far each update can move the policy, which helps keep training stable while the model learns from both human feedback and trial-and-error exploration.

Direct Preference Optimization (DPO) is an alternative to RLHF that adjusts the main model directly according to human preferences, without training a separate reward model. It uses a change of variables to define the "preference loss" directly as a function of the policy and uses this loss to fine-tune the model.
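The preference loss can be sketched for a single preference pair as follows. The inputs are assumed to be summed log-probabilities of the chosen and rejected responses under the policy being trained and under a frozen reference model; the function name and the `beta` default are illustrative assumptions.

```python
import math


def dpo_loss(policy_chosen, policy_rejected, ref_chosen, ref_rejected, beta=0.1):
    """DPO loss for one pair: -log(sigmoid(beta * difference of log-ratios))."""
    logits = beta * ((policy_chosen - ref_chosen) - (policy_rejected - ref_rejected))
    # Numerically stable form of -log(sigmoid(logits)).
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))
```

The loss falls as the policy raises the probability of the chosen response relative to the rejected one, measured against the reference model.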

AI vs Deep Learning

AI and Deep Learning are often used interchangeably, but they're not the same thing.

AI, or Artificial Intelligence, refers to the broader field of developing machines that can perform tasks that normally require human intelligence. Building such machines still depends on human help, which is why Human-in-the-Loop (HITL) is a model that requires human interaction.

Deep Learning is a type of Machine Learning that involves training artificial neural networks with multiple layers to learn complex patterns in data.

Reward Through Training

The reward model is a crucial component of the RLHF procedure, serving as a mechanism for alignment and infusing human preferences into the AI's learning trajectory.

This model is trained to map an input text sequence to a numerical reward value, which reinforcement learning algorithms can then optimize against.

The reward model can be either an end-to-end language model or a modular system, and its fundamental role is to ascertain which output better corresponds to human preferences.

Given two distinct text outputs generated by the AI, the reward model should assign the higher reward to the more suitable one.

In the RLHF training process, the reward model assesses both the RL policy's output and the initial LM's output, assigning each a numeric reward value to gauge its quality.

This process helps to refine the language model and the RL policy, ensuring that the AI generates high-quality responses that align with human preferences.
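A common way to train a reward model on such comparisons is a pairwise (Bradley-Terry style) loss. The article does not say which loss is used, so the sketch below is one plausible choice; the function name is hypothetical, and the two inputs are the scalar rewards the model assigns to the preferred and the non-preferred output.

```python
import math


def pairwise_reward_loss(reward_chosen, reward_rejected):
    """Return -log(sigmoid(reward_chosen - reward_rejected)) for one comparison."""
    margin = reward_chosen - reward_rejected
    # Numerically stable form of -log(sigmoid(margin)).
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

The loss is small when the preferred output receives the clearly higher reward and grows when the model ranks the pair the wrong way round.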

Frequently Asked Questions

What is the difference between RL and RLHF?

RLHF builds upon traditional RL by incorporating human feedback to guide the learning process, whereas traditional RL relies solely on a predefined reward signal. Human feedback lets RLHF optimize for qualities that are hard to capture in such a signal.

What is the difference between human in the loop and human out of the loop?

Human-in-the-Loop (HITL) systems require human input, while Human-out-of-the-Loop (HOOL) systems operate independently without human intervention.
