Learn to Rank, more commonly called learning to rank (LTR), is a machine learning approach to information retrieval in which a model learns to order search results by their relevance to a query.

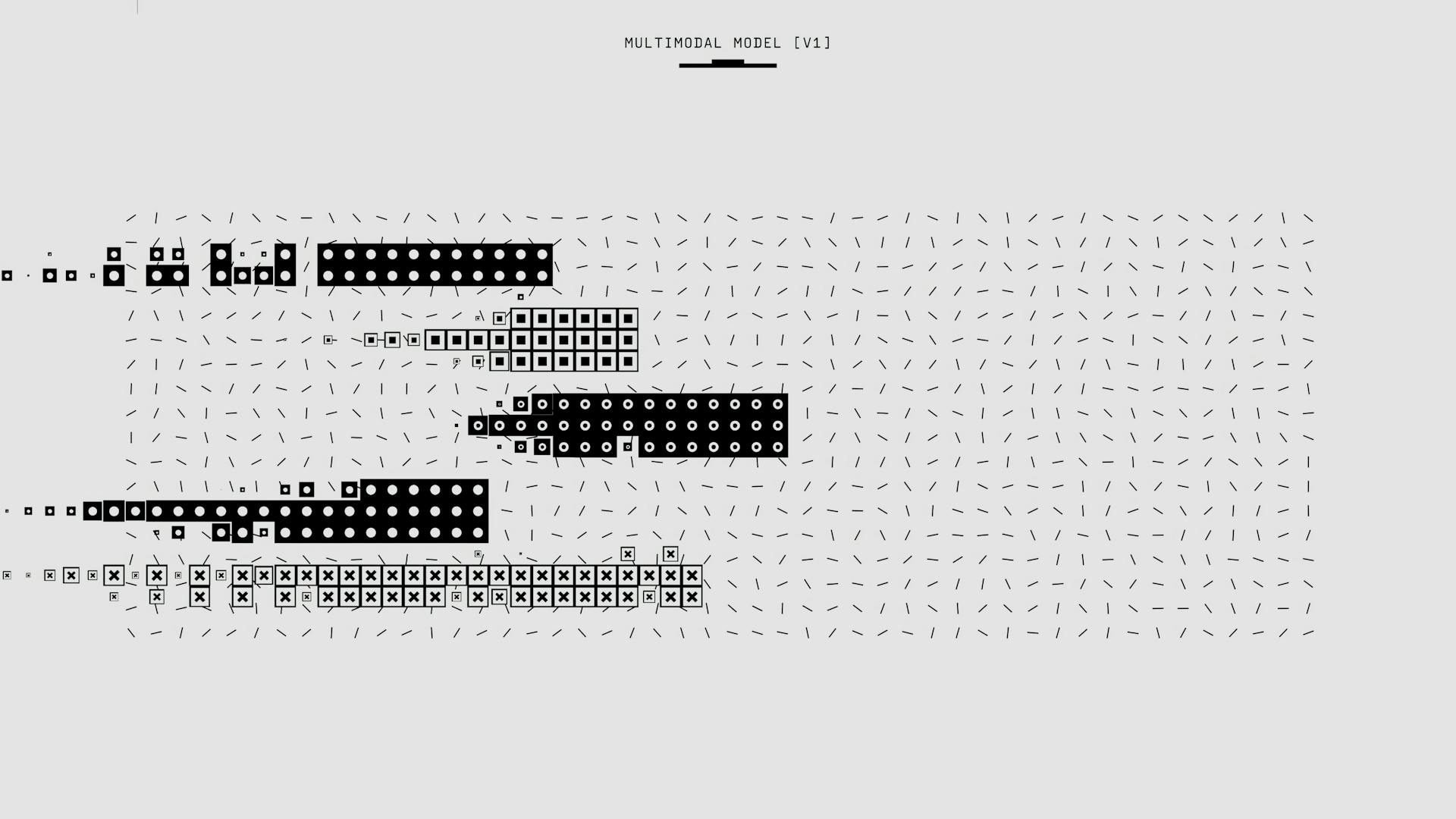

At its core, Learn to Rank trains a ranking function: a model that scores each candidate result so that results can be sorted by score. The function can take into account signals such as relevance, authority, and user behavior.

The ranking function is trained on a dataset of search queries and their corresponding results to learn the patterns and relationships between them. This training process enables the model to accurately rank search results based on their relevance and authority.

Because the ranking function is learned from data, it can be retrained as search patterns and user behavior change, which helps keep search results relevant over time.

Training Methods

Training a ranking model can be done in several ways, but the most straightforward approach is using the scikit-learn estimator interface.

To train a simple ranking model, you can use the xgboost.XGBRanker class without tuning. However, keep in mind that this class doesn't fully conform to the scikit-learn estimator guideline, so you may encounter some limitations when using utility functions.

One of the key requirements for training a ranking model is to have an additional sorted array called qid, which specifies the query group of input samples. This array is crucial for the model to understand the relationships between different samples.

In practice, you'll need to add a column qid to your data frame X, either using pandas or cuDF. This column is essential for the xgboost.XGBRanker.score() method to work correctly.

The simplest way to train a ranking model is with the rank:ndcg objective, which is derived from the NDCG metric. It is one of XGBoost's LambdaMART objectives, which compute gradients from pairs of documents within each query group.

Loss and Evaluation

XGBoost implements different LambdaMART objectives based on different metrics, including normalized discounted cumulative gain (NDCG) and pairwise loss.

NDCG can be used with both binary relevance and multi-level relevance, making it a good default choice if you're unsure about your data.
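To make the metric concrete, here is a minimal NDCG sketch in plain Python. It assumes the exponential gain 2^rel - 1 and a log2 position discount, which is one common formulation; other variants use the relevance value itself as the gain. The function names are illustrative.

```python
import math

def dcg(relevances, k=None):
    """Discounted cumulative gain with exponential gain 2^rel - 1."""
    rels = relevances[:k] if k is not None else relevances
    return sum((2 ** rel - 1) / math.log2(pos + 2) for pos, rel in enumerate(rels))

def ndcg(relevances, k=None):
    """DCG of the given order divided by the DCG of the ideal order."""
    ideal = dcg(sorted(relevances, reverse=True), k)
    return dcg(relevances, k) / ideal if ideal > 0 else 0.0

# Relevance labels listed in the order the ranker returned the documents.
binary = ndcg([1, 0, 1, 0])    # binary relevance
graded = ndcg([3, 2, 0, 1])    # multi-level relevance
perfect = ndcg([3, 2, 1, 0])   # already in ideal order, so NDCG is 1.0
```

The same function handles both label types, which is the property the paragraph above relies on.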

The rank:pairwise loss is the original version of the pairwise loss, also known as the RankNet loss or the pairwise logistic loss. Unlike rank:ndcg, it does not scale the loss with a ranking metric.

Whether scaling with a learning to rank (LTR) metric is actually more effective is still up for debate. The scaled form does, however, have a theoretical foundation in the literature on general lambda loss functions.
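For reference, the unscaled pairwise logistic loss for a single pair can be sketched as follows. This is a standalone illustration of the formula log(1 + exp(-(s_i - s_j))), where document i is the one labeled more relevant; it is not XGBoost's internal code, and the function name is made up here.

```python
import math

def pairwise_logistic_loss(score_preferred, score_other):
    """RankNet-style loss for one pair: log(1 + exp(-(s_i - s_j)))."""
    diff = score_preferred - score_other
    # Numerically stable evaluation of log(1 + exp(-diff)).
    return max(-diff, 0.0) + math.log1p(math.exp(-abs(diff)))

good = pairwise_logistic_loss(2.0, 0.5)  # preferred document scored higher
bad = pairwise_logistic_loss(0.5, 2.0)   # preferred document scored lower
tie = pairwise_logistic_loss(1.0, 1.0)   # equal scores give log(2)
```

A metric-scaled objective would multiply each pair's contribution by how much the metric changes when the two documents swap positions.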

Distributed Training

Distributed Training is a powerful feature in XGBoost that allows for faster training times on large datasets.

XGBoost implements distributed learning-to-rank with integration of multiple frameworks including Dask, Spark, and PySpark.

The interface is similar to the single-node counterpart, making it easy to transition to distributed training.

Scattering a query group across multiple workers is theoretically sound but can affect model accuracy. For most use cases the resulting discrepancy is small enough not to be an issue.

As long as each data partition is correctly sorted by query IDs, XGBoost can aggregate sample gradients accordingly.
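A small helper can verify the sorting requirement before a partition is handed to a worker. The sketch below uses plain Python lists; the row layout (qid, label, features) and the function names are assumptions for illustration.

```python
from itertools import groupby

def is_sorted_by_qid(qids):
    """True if query IDs never decrease, so each query forms one contiguous run."""
    return all(a <= b for a, b in zip(qids, qids[1:]))

def group_sizes(qids):
    """Sizes of contiguous query groups, in order of appearance."""
    return [len(list(run)) for _, run in groupby(qids)]

def sort_partition(rows):
    """Sort (qid, label, features) rows by qid; the sort is stable within a query."""
    return sorted(rows, key=lambda row: row[0])

partition = [(2, 1, [0.3]), (1, 0, [0.1]), (2, 0, [0.2]), (1, 2, [0.9])]
fixed = sort_partition(partition)
qids = [row[0] for row in fixed]
sizes = group_sizes(qids)
```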

Assignment Overview

The goal of this assignment is to gain hands-on experience using machine learning algorithms to train feature-based retrieval models.

To accomplish this, you'll be adding learning-to-rank capabilities to the QryEval search engine. This involves developing several custom features for learning-to-rank.

You'll also conduct experiments with your search engine and write a report about your work. Finally, you'll upload your work to the course website for grading.

Here are the specific tasks involved in the assignment:

- Add learning-to-rank capabilities to the QryEval search engine.

- Develop several custom features for learning-to-rank.

- Conduct experiments with your search engine.

- Write a report about your work.

- Upload your work to the course website for grading.

Constructing Pairs

Constructing Pairs is a crucial step in the learning-to-rank process, and XGBoost offers two strategies for doing so: the mean method and the topk method. These methods determine how pairs of documents are constructed for gradient calculation.

The mean strategy samples lambdarank_num_pair_per_sample pairs for each document in a query list. For example, if you have a list of 3 documents and set lambdarank_num_pair_per_sample to 2, XGBoost will randomly sample 6 pairs, assuming the documents have different labels.

The topk method constructs about k times |query| pairs, where |query| is the number of documents in the query list and k is the value of lambdarank_num_pair_per_sample: each document in the top k positions is paired with the other documents in the list. This means that for a query list of 3 documents with k set to 2, XGBoost will construct approximately 6 pairs.

The number of pairs counted here is an approximation since we skip pairs that have the same label. This is an important consideration when choosing between the mean and topk methods.
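The two strategies can be sketched in plain Python to show where the pair counts come from. This is a simplified illustration under my own assumptions (uniform random sampling, each unordered pair taken once in topk), not XGBoost's actual implementation; the function names are hypothetical.

```python
import random

def mean_pairs(labels, num_pair_per_sample, seed=0):
    """For every document, sample partners at random; skip same-label pairs."""
    rng = random.Random(seed)
    n = len(labels)
    pairs = []
    for i in range(n):
        for _ in range(num_pair_per_sample):
            j = rng.randrange(n)
            # A pair with equal labels (including i == j) carries no preference.
            if labels[i] != labels[j]:
                pairs.append((i, j))
    return pairs

def topk_pairs(labels, scores, k):
    """Pair each document in the top k (by current score) with those ranked below it."""
    order = sorted(range(len(labels)), key=lambda i: -scores[i])
    pairs = []
    for pos, i in enumerate(order[:k]):
        for j in order[pos + 1:]:
            if labels[i] != labels[j]:
                pairs.append((i, j))
    return pairs

labels = [2, 1, 0]
scores = [0.1, 0.9, 0.5]
sampled = mean_pairs(labels, num_pair_per_sample=2)
top = topk_pairs(labels, scores, k=2)
```

With 3 documents and 2 pairs per sample, mean_pairs yields at most 6 pairs, and fewer whenever a sampled partner has the same label. The topk sketch counts each unordered pair once, so its total stays below the k times |query| estimate.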

Search Engine Architecture

The QryEval search engine supports a reranking architecture, which is a crucial component of the learn to rank approach. This architecture involves developing a learning-to-rank (LTR) reranker.

To implement the LTR reranker, you'll need to support several capabilities. These include training a model, creating feature vectors for the top n documents in an initial ranking, using the trained model and feature vectors to calculate new document scores, and reranking the top n documents.

The LTR reranker needs to be able to train a model, which is most conveniently done when the RerankerXxx object is initialized, before any queries are reranked. You'll also need to create feature vectors for the top n documents in an initial ranking, which can be created by BM25 or read from a file.

The reranker should use the trained model and feature vectors to calculate new document scores, and then use these scores to rerank the top n documents from the initial ranking. The initial ranking may not contain n documents, so the reranker should be able to handle this variability.

Finally, the reranker needs to write the ranking to a .teIn file. The reranking depth should be set to 100.

Here are the key capabilities that the LTR reranker should support:

- Train a model when the RerankerXxx object is initialized

- Create feature vectors for the top n documents in an initial ranking

- Calculate new document scores using the trained model and feature vectors

- Rerank the top n documents from the initial ranking

- Write the ranking to a .teIn file
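The reranking step itself is small. The sketch below is written in Python purely for illustration and is not QryEval code; it assumes the initial ranking is a list of document IDs ordered best first, that score_fn wraps the trained model and feature vectors, and that documents below depth n keep their initial order. All names are hypothetical.

```python
def rerank(initial_ranking, score_fn, n=100):
    """Reorder the top n documents by model score; leave the rest untouched.

    initial_ranking: list of document IDs, best first (may hold fewer than n).
    score_fn: callable mapping a document ID to its new score.
    """
    head, tail = initial_ranking[:n], initial_ranking[n:]
    # sorted() is stable, so documents with equal scores keep their initial order.
    reranked = sorted(head, key=score_fn, reverse=True)
    return reranked + tail

model_scores = {"d1": 0.1, "d2": 0.9, "d3": 0.5, "d4": 2.0}
result = rerank(["d1", "d2", "d3", "d4"], model_scores.get, n=3)
short = rerank(["d3", "d1"], model_scores.get, n=100)
```

Slicing with [:n] handles an initial ranking that is shorter than n without any special case.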

Features and Models

Features and models are the backbone of Learn to Rank (LTR). Your program must implement features from three categories: document features, which are derived directly from document properties; query features, which are computed directly from the query; and query-document features, which describe the document in the context of the query.

Document features include the spam score, URL depth, and PageRank score, which are stored in the index as attributes. In Elasticsearch, features of this kind are extracted with templated queries.

Query features include the number of words in the query, while query-document features include the BM25 score for the title field. You can experiment with different combinations of these features to find a small set of features that delivers accurate results. Try to investigate the effectiveness of different groups of features, and discard any that do not improve accuracy.

The LTR space is evolving rapidly, and many approaches and model types are being experimented with. In practice, Elasticsearch relies specifically on gradient boosted decision tree (GBDT) models for LTR inference, such as LambdaMART, which provides strong ranking performance with low inference latencies.

Here's a summary of the three main categories of features:

- Document features: spam score, url depth, PageRank score

- Query features: number of words in the query

- Query-document features: BM25 score for the title field

Models

At the heart of Learning to Rank (LTR) is a machine learning (ML) model. A model is trained using training data in combination with an objective, which is to rank result documents in an optimal way.

The LTR space is evolving rapidly, with many approaches and model types being experimented with. Elasticsearch relies specifically on gradient boosted decision tree (GBDT) models for LTR inference.

Note that Elasticsearch supports model inference, but the training process must happen outside of Elasticsearch, using a GBDT model.

Features

Features are the building blocks of any search engine or information retrieval system. They are the signals that determine how well a document matches a user's query or interest, and they can vary significantly depending on the context.

You have considerable discretion about what features you develop, but there needs to be a good reason why your feature might be expected to make a difference. Your features are hypotheses about what information improves search accuracy.

There are three main categories of features: document features, query features, and query-document features. Document features are derived directly from document properties, such as product price in an eCommerce store. Query features are computed directly from the query submitted by the user, such as the number of words in the query.

Query-document features provide information about the document in the context of the query. These features are used to provide information about the document's relevance to the query. For example, the BM25 score for the title field is a query-document feature.

Some common relevance features used across different domains include text relevance scores (e.g., BM25, TF-IDF), document properties (e.g., price of a product, publication date), and popularity metrics (e.g., click-through rate, views).

Here are some examples of features that can be computed for each query-document pair in the judgment list:

- Document features: product price, publication date

- Query features: number of words in the query

- Query-document features: BM25 score for the title field

These features can be used to train a model that combines feature values into a ranking score, and ultimately defines your search relevance.
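The three feature categories can be sketched as one small function that builds a feature vector for a query-document pair. The BM25 variant, its k1 and b defaults, the whitespace tokenization, and the collection statistics below are all assumptions chosen for illustration; a real system would take them from its index.

```python
import math

def bm25(query_terms, field_terms, doc_freq, num_docs, avg_len, k1=1.2, b=0.75):
    """BM25 score of one field for a query (a common formulation, assumed here)."""
    score = 0.0
    for term in query_terms:
        tf = field_terms.count(term)
        if tf == 0:
            continue
        df = doc_freq.get(term, 0)
        idf = math.log(1 + (num_docs - df + 0.5) / (df + 0.5))
        norm = tf + k1 * (1 - b + b * len(field_terms) / avg_len)
        score += idf * tf * (k1 + 1) / norm
    return score

def feature_vector(query, doc, stats):
    q_terms = query.lower().split()
    title_terms = doc["title"].lower().split()
    return [
        doc["price"],                         # document feature
        len(q_terms),                         # query feature
        bm25(q_terms, title_terms, **stats),  # query-document feature
    ]

# Hypothetical collection statistics for the title field.
stats = {"doc_freq": {"red": 40, "shoes": 10}, "num_docs": 1000, "avg_len": 4.0}
doc = {"title": "Red running shoes", "price": 59.0}
vector = feature_vector("red shoes", doc, stats)
```

Each position in the vector is one hypothesis about what improves ranking, and the trained model decides how much weight it deserves.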

Machine Learning and Toolkits

Machine learning toolkits are essential for learn to rank tasks, and we're using two popular ones in this assignment. SVMrank consists of two C++ applications, svm_rank_learn and svm_rank_classify. Binaries are available for Mac, Linux, and Windows, or you can compile your own version from the source code.

Both toolkits read and write data from files, and they use the same file formats. The .param file indicates where the SVMrank executables are stored.

The RankLib toolkit provides several pairwise and listwise algorithms for training models, and it's included in the lucene-8.1.1 directory that you downloaded for HW1.

Machine Learning Toolkits

Machine Learning Toolkits are a crucial part of any machine learning project.

The two machine learning toolkits we'll be using in this assignment are SVMrank and RankLib.

SVMrank consists of two C++ software applications: svm_rank_learn and svm_rank_classify.

These executables can be run on Mac, Linux, or Windows, or you can compile your own version using the source code.

RankLib provides several pairwise and listwise algorithms for training models.

The RankLib .jar file is included in the lucene-8.1.1 directory that you downloaded for HW1.

Both SVMrank and RankLib use the same file formats to read and write data.

These file formats allow you to train models and calculate document scores.
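The text does not spell the formats out. As an assumption, the sketch below writes feature vectors in the SVM-light style line format that ranking toolkits of this kind commonly read: a relevance label, a query ID, numbered feature values, and an optional comment. Check the toolkit documentation for the exact format your version expects; the function name is hypothetical.

```python
def format_example(label, qid, features, doc_id=None):
    """Format one training example as '<label> qid:<qid> 1:<v1> 2:<v2> ... # <doc_id>'."""
    parts = [str(label), f"qid:{qid}"]
    # Feature indices start at 1 in this format.
    parts += [f"{i}:{value}" for i, value in enumerate(features, start=1)]
    line = " ".join(parts)
    return f"{line} # {doc_id}" if doc_id else line

line = format_example(2, 1, [0.5, 0.25], doc_id="doc42")
bare = format_example(0, 7, [1.0])
```

Lines that belong to the same query should be written next to each other, since the toolkits group examples by query ID.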

Relevance Assessments

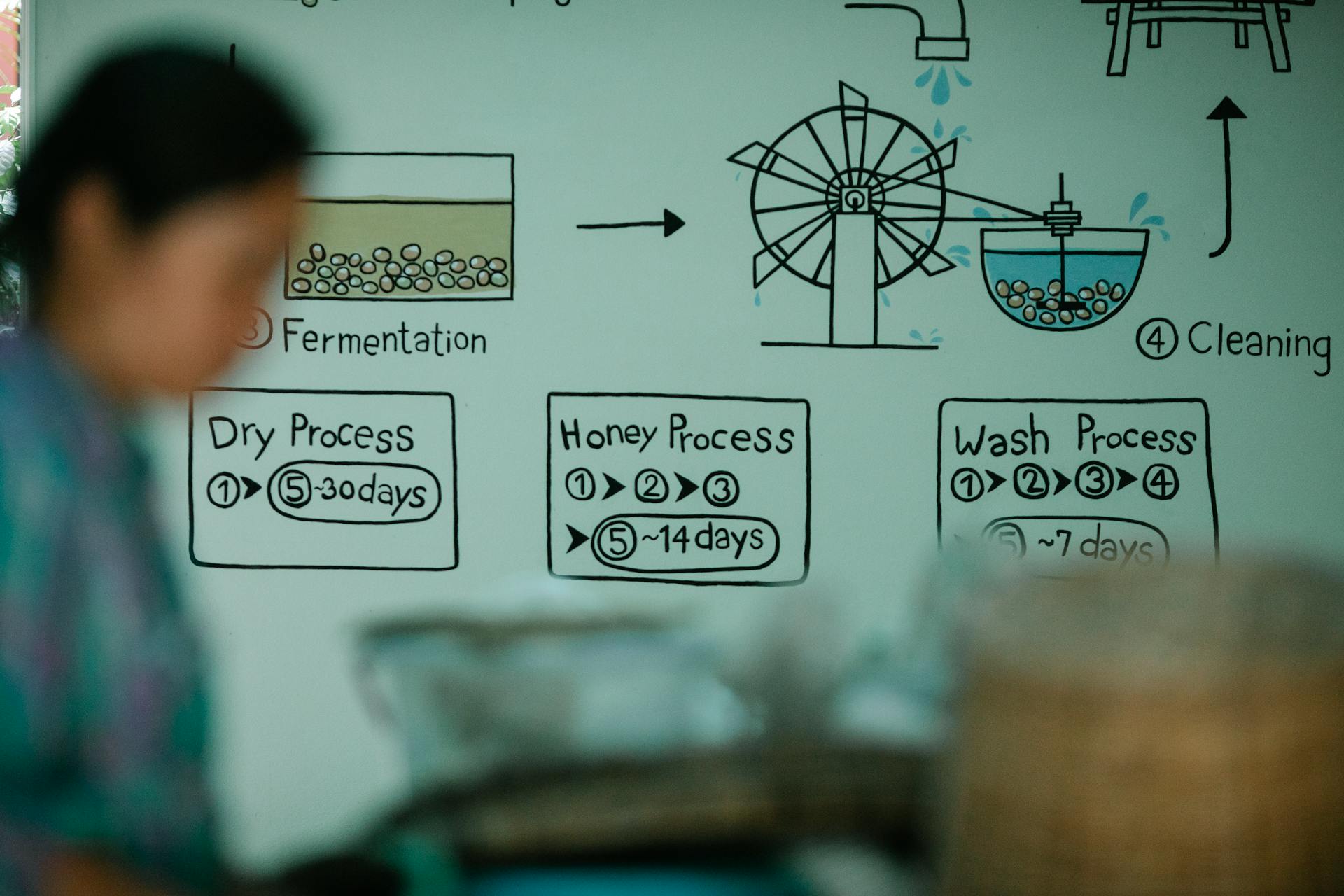

Relevance assessments are a crucial part of the learn to rank process. Training data is generated from a file of relevance assessments, in which the documents for each query are judged on a two-point scale.

The relevance assessments were produced by different years of the TREC conference, providing a diverse set of data to train the model. This data is used to create a judgment list, which is a set of queries and documents with a relevance grade.

Judgment Lists

A judgment list is a set of queries and documents with a relevance grade, typically used to train a Learning-to-Rank (LTR) model. It's a crucial component of relevance assessments, as it determines the ideal ordering of results for a given search query.

The judgment list can be human or machine generated, often populated from behavioral analytics with human moderation. Judgment lists are commonly used to train LTR models, which aim to fit the model to the judgment list rankings as closely as possible for new queries and documents.

The quality of your judgment list greatly influences the overall performance of the LTR model. A balanced number of examples for each query type is essential to prevent overfitting and allow the model to generalize effectively across all query types.

Here are some key aspects to consider when building your judgment list:

- Maintain a balanced number of examples for each query type.

- Balance the number of positive and negative examples to help the model learn to distinguish between relevant and irrelevant content more accurately.

A judgment list can be created manually by humans, but techniques are available to leverage user engagement data, such as clicks or conversions, to construct judgment lists automatically. This can be a time-saving and efficient way to build a judgment list.
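The balance considerations above can be checked mechanically. The sketch below assumes a judgment list stored as (query, doc_id, grade) tuples, where any grade above 0 counts as a positive example; the 20% threshold is an arbitrary value picked for illustration.

```python
from collections import Counter, defaultdict

def grade_counts(judgments):
    """Count how often each grade occurs per query."""
    counts = defaultdict(Counter)
    for query, _doc_id, grade in judgments:
        counts[query][grade] += 1
    return dict(counts)

def unbalanced_queries(judgments, min_share=0.2):
    """Return queries whose share of positive examples is too low or too high."""
    flagged = []
    for query, counter in grade_counts(judgments).items():
        total = sum(counter.values())
        positives = sum(count for grade, count in counter.items() if grade > 0)
        share = positives / total
        if share < min_share or share > 1 - min_share:
            flagged.append(query)
    return flagged

judgments = [
    ("laptop", "d1", 2), ("laptop", "d2", 0), ("laptop", "d3", 1), ("laptop", "d4", 0),
    ("phone", "d5", 0), ("phone", "d6", 0), ("phone", "d7", 0), ("phone", "d8", 0),
]
flagged = unbalanced_queries(judgments)
```

Here the "phone" query is flagged because it has no positive examples, so the model could learn nothing about what a relevant result for it looks like.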

What Are the Limitations of Learning to Rank?

The effectiveness of any relevance assessment model is heavily reliant on the quality and quantity of the training data. In other words, if the data is poor, the model will likely produce poor results.

Learning-to-Rank models, in particular, can be complex to design and fine-tune, requiring expertise in machine learning and the specific application domain. This can be a barrier for those who don't have the necessary skills.

In dynamic environments where data patterns change rapidly, models may require frequent updates to maintain accuracy. This can be a challenge for organizations with limited resources or expertise.

Biases present in the training data can be perpetuated or even amplified in the model's rankings, leading to biased outcomes. This is a significant concern for relevance assessments that aim to be fair and unbiased.

Frequently Asked Questions

What is a learning-to-rank model?

A learning-to-rank model is a type of machine learning model that helps information retrieval systems rank search results in order of relevance. It uses algorithms to learn from data and improve the accuracy of ranked search results.

What is the difference between learning-to-rank and a recommendation system?

Learning-to-rank (LTR) differs from traditional recommendation systems by focusing on ranking items rather than predicting ratings, producing ordered lists of items. This approach enables more accurate and relevant item ordering, making it ideal for applications where ranking is crucial.

What is the learned ranking function?

The Learned Ranking Function (LRF) is a system that generates personalized recommendations by directly optimizing for long-term user satisfaction. It uses short-term user behavior predictions to create a slate of tailored suggestions.

What is rank in deep learning?

In deep learning, ranking refers to a technique where items are directly ranked by training a model to predict the relative score of one item over another, with higher scores indicating higher ranks. This approach enables the model to learn the underlying relationships between items and assign a meaningful ranking to each one.

What is the best algorithm for ranking?

No single algorithm is best for every ranking problem. Ranking by estimated probability of relevance is a common choice because it takes the uncertainty of the data into account, which suits applications where accuracy is crucial.

Sources

- https://xgboost.readthedocs.io/en/latest/tutorials/learning_to_rank.html

- https://boston.lti.cs.cmu.edu/classes/11-642/HW/HW3/

- https://www.elastic.co/guide/en/elasticsearch/reference/current/learning-to-rank.html

- https://www.elastic.co/search-labs/blog/elasticsearch-learning-to-rank-introduction

- https://klu.ai/glossary/learning-to-rank
