A confusion matrix, usually plotted as a color-coded grid, is a key tool for evaluating a machine learning model, particularly in classification problems. It shows how well your model identifies each class.

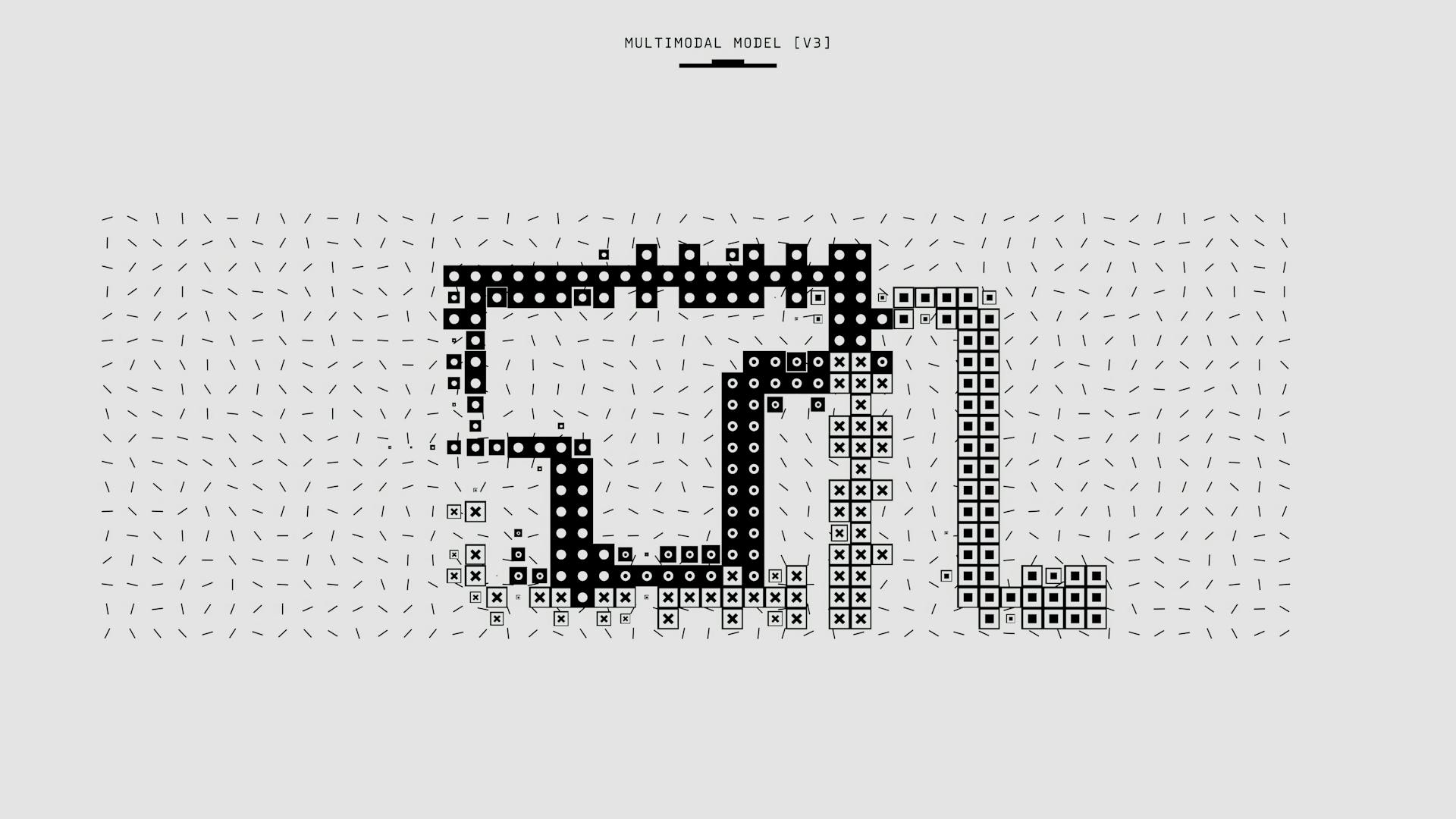

The confusion matrix is a table that displays the number of true positives, true negatives, false positives, and false negatives. This matrix is used to calculate various metrics such as accuracy, precision, recall, and F1-score. These metrics provide a more detailed understanding of your model's performance.

To plot a confusion matrix, you need a classification problem with at least two classes. The matrix shows how many instances of each actual class were assigned to each predicted class, so correct classifications and misclassifications appear side by side. This visual representation helps you identify where your model needs improvement.
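As a minimal sketch in plain Python (no plotting library assumed), the matrix can be built by counting every (actual, predicted) pair. Rows are actual classes and columns are predicted classes, the convention scikit-learn's confusion_matrix also uses. The helper names build_confusion_matrix and print_matrix are hypothetical, and the text grid only stands in for a real plot.

```python
from typing import Hashable, List, Sequence


def build_confusion_matrix(
    y_true: Sequence[Hashable],
    y_pred: Sequence[Hashable],
    labels: Sequence[Hashable],
) -> List[List[int]]:
    """Count (actual, predicted) pairs: rows = actual class, columns = predicted class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for actual, predicted in zip(y_true, y_pred):
        matrix[index[actual]][index[predicted]] += 1
    return matrix


def print_matrix(matrix: List[List[int]], labels: Sequence[Hashable]) -> None:
    """Print a plain-text grid as a stand-in for a graphical plot."""
    cells = [str(x) for x in labels] + [str(v) for row in matrix for v in row]
    width = max(len(c) for c in cells)
    print("rows = actual, columns = predicted")
    print(" " * (width + 2) + " ".join(str(lab).rjust(width) for lab in labels))
    for lab, row in zip(labels, matrix):
        print(str(lab).rjust(width) + "  " + " ".join(str(v).rjust(width) for v in row))


y_true = [0, 0, 0, 1, 1, 1, 1, 0]
y_pred = [0, 1, 0, 1, 1, 0, 1, 0]
cm = build_confusion_matrix(y_true, y_pred, labels=[0, 1])
print(cm)  # [[3, 1], [1, 3]]
print_matrix(cm, [0, 1])
```

With labels [0, 1] and 1 as the positive class, the cells read [[TN, FP], [FN, TP]]. For a graphical version, scikit-learn's ConfusionMatrixDisplay.from_predictions(y_true, y_pred) draws the matrix with matplotlib.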


Types of Classification

In machine learning, classification problems can be categorized into different types based on the number of classes they involve.

A binary classification problem involves two classes, such as spam versus not spam. A multi-class classification problem involves predicting one of three or more classes, such as whether a person loves Facebook, Instagram, or Snapchat.

For each class, the true positive, true negative, false positive, and false negative counts are calculated by adding the relevant cell values of the confusion matrix, treating that class as positive and all other classes as negative.

For a three-class problem like this one, the confusion matrix is 3 x 3, with one row and one column for each class.

Confusion matrices are useful for evaluating the performance of a classification model, but they can be complex to decipher without a clear understanding of their structure.



Plotting and Results

For a binary problem, the confusion matrix has four quadrants: True Negative, False Positive, False Negative, and True Positive. They are arranged in a 2 x 2 grid; in scikit-learn's layout, for example, the top row holds True Negative and False Positive, and the bottom row holds False Negative and True Positive.

In the confusion matrix, "True" means the prediction was correct, while "False" means it was wrong. "Positive" and "Negative" refer to the class the model predicted.

To quantify the quality of the model, we can calculate different measures based on the confusion matrix.

Plotting with Classes

In Yellowbrick, you can add class names to a ConfusionMatrix plot using the label_encoder argument. This argument can be a sklearn.preprocessing.LabelEncoder or a dict with the encoding-to-string mapping.

The class labels shown in the confusion matrix are the ones observed while fitting, taken from the target you pass to fit, which can be an array or series of class values.

With a dict, each key is an encoded class label and each value is the class name to display, for example {0: 'cat', 1: 'dog'}.

Mapping the encoded labels to readable class names this way makes the plot easier to interpret.
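Yellowbrick applies this mapping for you when it draws the plot. As a plain-Python illustration only (not Yellowbrick's API), the sketch below shows what an encoding-to-string dict does to a matrix whose rows and columns are the encoded labels 0, 1, 2. The helper name labeled_rows and the cat/dog/bird classes are hypothetical.

```python
from typing import Dict, List


def labeled_rows(
    matrix: List[List[int]], encoding: Dict[int, str]
) -> Dict[str, Dict[str, int]]:
    """Re-key a confusion matrix (rows = actual, columns = predicted) by class name.

    Assumes row/column i corresponds to the i-th encoded label in sorted order,
    and `encoding` maps each encoded label to its display name.
    """
    names = [encoding[code] for code in sorted(encoding)]
    return {
        actual: {predicted: matrix[i][j] for j, predicted in enumerate(names)}
        for i, actual in enumerate(names)
    }


encoding = {0: "cat", 1: "dog", 2: "bird"}
matrix = [
    [5, 1, 0],
    [2, 4, 0],
    [0, 0, 6],
]
table = labeled_rows(matrix, encoding)
print(table["dog"]["cat"])  # 2: dogs predicted as cats
```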

Quick Method

Yellowbrick also provides a quick method, confusion_matrix, which achieves the same result as the previous example in a single, more streamlined call.


It builds the ConfusionMatrix object with the associated arguments, fits it, and then (optionally) shows it immediately. The Yellowbrick documentation demonstrates it with a LogisticRegression model that struggles to effectively model the credit dataset.

The quick method is handy for a fast look at a model like that one. When a model performs this poorly, the documentation's example hints at checking the features for multicollinearity, for instance with Yellowbrick's Rank2D visualizer.


Metrics and Formulas

The confusion matrix is the starting point for several metrics that summarize how well your classification model is doing.

Accuracy is one of the most common metrics, measuring the proportion of correctly classified instances out of the total number of instances. Precision is another important metric, indicating the proportion of true positives among all predicted positive instances.

Sensitivity, also known as Recall, measures the proportion of actual positives that were correctly identified by the model. Specificity measures the proportion of actual negatives that were correctly identified as such.
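The definitions above translate directly into arithmetic on the four counts. This is a minimal sketch; the counts are hypothetical and chosen only to give round numbers.

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Correct predictions divided by all predictions."""
    return (tp + tn) / (tp + tn + fp + fn)


def sensitivity(tp: int, fn: int) -> float:
    """Recall / true positive rate: share of actual positives the model found."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """True negative rate: share of actual negatives correctly identified."""
    return tn / (tn + fp)


# Hypothetical counts, for illustration only.
tp, tn, fp, fn = 40, 45, 5, 10
print(accuracy(tp, tn, fp, fn))  # 0.85
print(sensitivity(tp, fn))       # 0.8
print(specificity(tn, fp))       # 0.9
```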


Precision

Precision is a measure of how accurate your model is when it predicts a positive outcome. It's calculated as the number of true positives divided by the sum of true positives and false positives.

In simpler terms, precision is the fraction of predicted positives that are actually positive: of all the cases your model labels positive, it tells you what share are correct.

Written as a formula: Precision = True Positive / (True Positive + False Positive).
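One practical detail: precision is undefined when the model makes no positive predictions, because the denominator is zero. The sketch below returns 0.0 in that case; that is an assumed convention, and scikit-learn's precision_score exposes a zero_division parameter so you can choose the behavior yourself.

```python
def precision(tp: int, fp: int) -> float:
    """TP / (TP + FP); returns 0.0 when there are no positive predictions (assumed convention)."""
    predicted_positive = tp + fp
    if predicted_positive == 0:
        return 0.0
    return tp / predicted_positive


print(precision(tp=30, fp=10))  # 0.75: 30 of 40 positive predictions were correct
print(precision(tp=0, fp=0))    # 0.0: the model predicted no positives at all
```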

F-Score

The F-score is a useful metric for evaluating classification models, especially when dealing with imbalanced datasets. It is the harmonic mean of precision and recall.

The F-score accounts for both false positive and false negative cases, which makes it a useful single summary when both kinds of error matter. When one type of error is more costly than the other, the weighted F-beta score lets you favor recall (beta greater than 1) or precision (beta less than 1). For example, in medical diagnosis, missing a positive case (false negative) can be more serious than raising a false alarm (false positive).


The F-score is calculated using the formula 2 * ((Precision * Sensitivity) / (Precision + Sensitivity)). This score doesn't take into account the true negative values, which can be a limitation in certain scenarios.

The F1-score is the F-score that weights precision and recall equally, which is exactly the formula above. It captures the trends in precision and recall in a single value, but its interpretability is poor: from the score alone you cannot tell whether your classifier is favoring precision or recall.
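A minimal sketch of the general F-beta formula, of which F1 is the beta = 1 case. With recall lower than precision, weighting recall more (beta = 2) pulls the score down, which is the behavior you would want in the medical-diagnosis example. The input values are hypothetical.

```python
def f_beta(precision: float, recall: float, beta: float = 1.0) -> float:
    """Weighted harmonic mean of precision and recall.

    beta = 1 gives the F1-score, 2 * P * R / (P + R);
    beta > 1 weights recall more, beta < 1 weights precision more.
    """
    if precision == 0 and recall == 0:
        return 0.0  # avoid 0 / 0
    b2 = beta ** 2
    return (1 + b2) * precision * recall / (b2 * precision + recall)


print(round(f_beta(0.75, 0.6), 3))            # 0.667 (F1)
print(round(f_beta(0.75, 0.6, beta=2.0), 3))  # 0.625 (F2, recall-weighted)
```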


Important Concepts

A confusion matrix is a performance evaluation tool in machine learning, representing the accuracy of a classification model. It displays the number of true positives, true negatives, false positives, and false negatives.

True Positive (TP): the model predicts the positive class and the actual class is positive. This is a correct prediction.

True Negative (TN): the model predicts the negative class and the actual class is negative. This is also a correct prediction.

False Positive (FP): the model predicts the positive class, but the actual class is negative. This is also known as a Type I error.

False Negative (FN): the model predicts the negative class, but the actual class is positive. This is also known as a Type II error.

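Here's a summary of the different values in a confusion matrix:

| Term | Actual class | Predicted class | Error type |
|---|---|---|---|
| True Positive (TP) | Positive | Positive | None (correct) |
| True Negative (TN) | Negative | Negative | None (correct) |
| False Positive (FP) | Negative | Positive | Type I error |
| False Negative (FN) | Positive | Negative | Type II error |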
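The four definitions can be expressed as a small lookup for a single binary prediction, then tallied over a dataset. This is an illustrative sketch; the function name outcome and the sample data are hypothetical.

```python
from collections import Counter


def outcome(actual: bool, predicted: bool) -> str:
    """Name the confusion-matrix cell for one binary prediction (True = positive)."""
    if predicted:
        return "TP" if actual else "FP"  # FP: Type I error
    return "FN" if actual else "TN"      # FN: Type II error


actual = [True, True, False, False, True]
predicted = [True, False, True, False, True]
counts = Counter(outcome(a, p) for a, p in zip(actual, predicted))
print(counts)
```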

Multiclass

Multiclass tasks involve predicting one of several classes, and the confusion matrix is a crucial tool for evaluating their performance.

For multiclass tasks, the confusion matrix is an N x N matrix, where N is the number of classes and each cell counts one combination of true and predicted labels.

Here C_{i, j} is the number of samples whose true label is i and whose predicted label is j, so rows correspond to true labels and columns to predicted labels. For class i:

- C_{i, i} is the number of true positives
- the sum of C_{i, j} for j != i (the rest of row i) is the number of false negatives
- the sum of C_{j, i} for j != i (the rest of column i) is the number of false positives
- all remaining cells add up to the number of true negatives
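The per-class rules above can be sketched in plain Python, assuming the same convention (rows = true labels, columns = predicted labels). The function name per_class_counts and the 3 x 3 counts are hypothetical.

```python
from typing import Dict, List


def per_class_counts(matrix: List[List[int]]) -> List[Dict[str, int]]:
    """One-vs-rest TP/FN/FP/TN for each class.

    Assumes matrix[i][j] counts samples of true class i predicted as class j.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    results = []
    for i in range(n):
        tp = matrix[i][i]
        fn = sum(matrix[i][j] for j in range(n) if j != i)  # rest of row i
        fp = sum(matrix[j][i] for j in range(n) if j != i)  # rest of column i
        tn = total - tp - fn - fp                             # every other cell
        results.append({"tp": tp, "fn": fn, "fp": fp, "tn": tn})
    return results


# Hypothetical 3-class matrix (e.g. Facebook, Instagram, Snapchat).
cm = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 1, 9],
]
for name, counts in zip(["Facebook", "Instagram", "Snapchat"], per_class_counts(cm)):
    print(name, counts)
```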


In TorchMetrics, the result is a [num_classes, num_classes] tensor, where num_classes is an integer specifying the number of classes.

To compute it, you pass the predictions and true labels as tensors, along with the number of classes and optional parameters such as the normalization mode and an index to ignore.

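Here's a breakdown of the main parameters you can pass to the multiclass_confusion_matrix function:

- preds: tensor of predictions
- target: tensor of ground-truth labels
- num_classes: integer number of classes
- normalize: optional normalization mode; 'true' normalizes over the targets (rows), 'pred' over the predictions (columns), 'all' over the whole matrix, and None or 'none' returns raw counts
- ignore_index: optional target value to ignore; samples with this target do not contribute to the matrix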
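TorchMetrics does this with tensors. The plain-Python sketch below is not TorchMetrics code; it only mimics the normalization modes ('true' for rows, 'pred' for columns, 'all' for the whole matrix) and the ignore-index behavior so you can see what they do. The function name confusion_matrix_sketch and the sample data are hypothetical.

```python
from typing import List, Optional, Sequence


def confusion_matrix_sketch(
    preds: Sequence[int],
    target: Sequence[int],
    num_classes: int,
    normalize: Optional[str] = None,
    ignore_index: Optional[int] = None,
) -> List[List[float]]:
    """Rows = true class, columns = predicted class."""
    cm = [[0.0] * num_classes for _ in range(num_classes)]
    for p, t in zip(preds, target):
        if ignore_index is not None and t == ignore_index:
            continue  # samples with the ignored target are skipped entirely
        if not (0 <= t < num_classes and 0 <= p < num_classes):
            raise ValueError(f"label out of range: target={t}, pred={p}")
        cm[t][p] += 1

    if normalize in (None, "none"):
        return cm
    if normalize == "true":  # each row (true class) sums to 1
        sums = [sum(row) for row in cm]
        return [[v / s if s else 0.0 for v in row] for row, s in zip(cm, sums)]
    if normalize == "pred":  # each column (predicted class) sums to 1
        sums = [sum(cm[i][j] for i in range(num_classes)) for j in range(num_classes)]
        return [[v / sums[j] if sums[j] else 0.0 for j, v in enumerate(row)] for row in cm]
    if normalize == "all":  # the whole matrix sums to 1
        total = sum(sum(row) for row in cm)
        return [[v / total if total else 0.0 for v in row] for row in cm]
    raise ValueError(f"unknown normalize mode: {normalize!r}")


preds = [0, 2, 1, 1, 0, 2]
target = [0, 1, 1, -1, 0, 2]  # -1 marks a sample to ignore
print(confusion_matrix_sketch(preds, target, 3, ignore_index=-1))
print(confusion_matrix_sketch(preds, target, 3, normalize="true", ignore_index=-1))
```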


Calculations and Examples

A confusion matrix is a table used to evaluate the performance of a classification model.

Let's say we have a model that classifies images as either cats or dogs, with "cat" as the positive class. On a hypothetical test set of 100 cats and 100 dogs, the model correctly labels 90 of the cats (the other 10 are labeled as dogs) and mislabels 10 of the dogs as cats, so 10% of the images it calls cats are actually dogs.

The true positive rate (TPR) is the proportion of actual positive instances that are correctly identified. In our example, the TPR is 90 / 100 = 90%.

The false positive rate (FPR) is the proportion of actual negative instances that are incorrectly identified as positive. In our example, the FPR is 10 / 100 = 10%.

The accuracy of a model is the proportion of correct predictions. In our example, the accuracy is (90 + 90) / 200 = 90%.

The precision of a model is the proportion of true positive instances among all predicted positive instances. In our example, the precision is 90 / (90 + 10) = 90%.
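The cats-versus-dogs arithmetic can be checked in a few lines. The counts are hypothetical (100 cats and 100 dogs, with "cat" as the positive class), matching the scenario described above.

```python
# Hypothetical counts: 100 cats, 100 dogs, "cat" = positive class.
tp = 90  # cats predicted as cats
fn = 10  # cats predicted as dogs
fp = 10  # dogs predicted as cats
tn = 90  # dogs predicted as dogs

tpr = tp / (tp + fn)                        # 90 / 100
fpr = fp / (fp + tn)                        # 10 / 100
accuracy = (tp + tn) / (tp + tn + fp + fn)  # 180 / 200
precision = tp / (tp + fp)                  # 90 / 100

print(f"TPR={tpr:.0%} FPR={fpr:.0%} accuracy={accuracy:.0%} precision={precision:.0%}")
# TPR=90% FPR=10% accuracy=90% precision=90%
```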


