Variational inference with normalizing flows is a technique for building flexible approximations to intractable posterior distributions. It starts from a simple distribution, such as a standard normal, and reshapes it into a complex one that can match the target posterior more closely than a single Gaussian could.

A normalizing flow transforms a simple distribution into a more complex one by passing samples from it through a sequence of invertible, differentiable transformations.

One key benefit of normalizing flows is that the density of a transformed sample can be evaluated exactly and efficiently. By the change-of-variables formula, the log-density of the output equals the log-density of the original sample under the simple base distribution, minus the log-absolute-determinant of each transformation's Jacobian.
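As a minimal sketch of this bookkeeping, assuming hypothetical one-dimensional affine layers f(z) = shift + exp(log_scale)·z (chosen only so the result can be checked by hand), the code below pushes a standard-normal sample through a stack of layers and subtracts each layer's log|det J| from the base log-density:

```python
import math

def log_std_normal(z):
    """Log-density of the base distribution N(0, 1)."""
    return -0.5 * (math.log(2 * math.pi) + z * z)

def affine_layer(z, shift, log_scale):
    """Hypothetical 1-D layer f(z) = shift + exp(log_scale) * z, with log|df/dz| = log_scale."""
    return shift + math.exp(log_scale) * z, log_scale

def flow_log_density(z0, layers):
    """Push z0 ~ N(0, 1) through the layers; return (z_K, log q_K(z_K)) via change of variables."""
    z, log_q = z0, log_std_normal(z0)
    for shift, log_scale in layers:
        z, log_det = affine_layer(z, shift, log_scale)
        log_q -= log_det          # subtract each layer's log|det J|
    return z, log_q

# Two layers: z1 = 1 + 2*z0, then z2 = -0.5 + 3*z1 = 2.5 + 6*z0.
z_K, log_q = flow_log_density(0.7, [(1.0, math.log(2.0)), (-0.5, math.log(3.0))])
```

Two affine layers compose into another affine map, so here the result is the density of N(2.5, 6²) and can be compared with the closed form; richer flows use the same bookkeeping with nonlinear layers.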

Normalizing flows can be used in a variety of applications, including density estimation and generative modeling.

Technical Implementation

In variational inference, we start from the marginal likelihood of a probabilistic model, p_θ(x) = ∫ p_θ(x, z) dz, which requires integrating out any missing or latent variables z. This integral is usually intractable.

To make inference tractable, we introduce an approximation q_ϕ(z | x) to the true posterior p_θ(z | x): a parameterized distribution over the latent variables z, where ϕ are the variational parameters to be optimized.

We then use Jensen's inequality to obtain a lower bound on the log marginal likelihood, known as the evidence lower bound (ELBO). The ELBO is a single objective function that guides the optimization of both the model parameters θ and the variational parameters ϕ.

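The ELBO is derived by rewriting the integral as an expectation under q_ϕ and then using the concavity of the logarithm to move the log inside that expectation, turning the log of an integral into an integral of a log. The result is a lower bound that can be estimated from samples of q_ϕ.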
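Written out in the notation used here (p_θ(x, z) for the model, q_ϕ(z | x) for the approximation), the derivation is sketched below; the inequality is Jensen's inequality applied to the concave logarithm:

```latex
\begin{aligned}
\log p_\theta(x) &= \log \int p_\theta(x, z)\, dz
  = \log \mathbb{E}_{q_\phi(z \mid x)}\!\left[\frac{p_\theta(x, z)}{q_\phi(z \mid x)}\right] \\
&\ge \mathbb{E}_{q_\phi(z \mid x)}\!\left[\log p_\theta(x, z) - \log q_\phi(z \mid x)\right]
  =: \mathcal{L}(\theta, \phi; x)
\end{aligned}
```

The gap between the two sides is KL(q_ϕ(z | x) ‖ p_θ(z | x)), so the bound is tight exactly when the approximation equals the true posterior.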

We can now use the ELBO as the objective for variational inference, but two tasks remain: computing gradients of the ELBO with respect to ϕ, even though the expectation is itself taken under q_ϕ, and choosing a family of approximate posteriors flexible enough to fit the true posterior well.
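For the first task, a common approach is the reparameterization trick. Below is a minimal sketch of a reparameterized Monte Carlo ELBO estimate, assuming a hypothetical conjugate toy model (z ~ N(0, 1), x | z ~ N(z, 1)) so that the exact log p(x) is available for comparison. Writing z = m + s·ε with ε ~ N(0, 1) makes every sample a differentiable function of the variational parameters (m, s); the gradient computation itself is omitted here.

```python
import math
import random

def log_normal(x, mean, std):
    """Log-density of N(mean, std^2) at x."""
    return -0.5 * math.log(2 * math.pi) - math.log(std) - 0.5 * ((x - mean) / std) ** 2

def log_joint(x, z):
    """Hypothetical toy model: z ~ N(0, 1) and x | z ~ N(z, 1)."""
    return log_normal(z, 0.0, 1.0) + log_normal(x, z, 1.0)

def elbo_estimate(x, m, s, num_samples=10_000, seed=0):
    """Monte Carlo ELBO for q(z) = N(m, s^2) using reparameterized samples."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(num_samples):
        eps = rng.gauss(0.0, 1.0)   # noise from a fixed base distribution
        z = m + s * eps             # reparameterization: z depends smoothly on (m, s)
        total += log_joint(x, z) - log_normal(z, m, s)
    return total / num_samples

def log_marginal(x):
    """Exact log p(x) for the toy model, since x ~ N(0, 2)."""
    return log_normal(x, 0.0, math.sqrt(2.0))
```

With q set to the exact posterior N(x/2, 1/2), every sample contributes exactly log p(x); any other (m, s) gives a lower estimate, the gap being the KL divergence to the posterior.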

Families of Flows

Planar flows are a type of invertible transformation that can be used to model complex distributions. They apply linear transformations combined with non-linear activations.

Planar flows are defined by f(z) = z + u h(w^T z + b), where u, w ∈ ℝ^D and b ∈ ℝ are parameters, and h is a smooth, element-wise non-linearity such as tanh.

With ψ(z) = h'(w^T z + b) w, the Jacobian determinant of a planar flow is |det ∂f/∂z| = |1 + u^T ψ(z)|, so its log-determinant is log|1 + u^T ψ(z)|.
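A minimal sketch of a single planar layer on plain Python lists, assuming h = tanh (the text leaves h generic). It returns the transformed point together with log|1 + u^T ψ(z)|; the hypothetical helper `is_invertible` checks w^T u ≥ −1, a sufficient condition for invertibility when h = tanh:

```python
import math

def planar_flow(z, u, w, b):
    """Planar layer f(z) = z + u * tanh(w^T z + b); h = tanh is an assumption.

    Returns (f(z), log|det df/dz|), where det df/dz = 1 + u^T psi(z)
    and psi(z) = h'(w^T z + b) * w.
    """
    a = sum(wi * zi for wi, zi in zip(w, z)) + b
    h = math.tanh(a)
    h_prime = 1.0 - h * h                      # derivative of tanh at a
    out = [zi + ui * h for zi, ui in zip(z, u)]
    psi = [h_prime * wi for wi in w]
    log_det = math.log(abs(1.0 + sum(ui * pi for ui, pi in zip(u, psi))))
    return out, log_det

def is_invertible(u, w):
    """Hypothetical helper: sufficient invertibility condition w^T u >= -1 for h = tanh."""
    return sum(ui * wi for ui, wi in zip(u, w)) >= -1.0
```

Only dot products appear, so the cost is O(D) per sample, which is what makes the determinant cheap.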

Here are some key characteristics of planar flows:

- They apply linear transformations combined with non-linear activations.

- The log-determinant of the Jacobian can be computed in O(D) time, thanks to the matrix determinant lemma.

- They are invertible provided the parameters satisfy a constraint; for h = tanh, w^T u ≥ −1 is sufficient.

Invertible linear-time transformations, such as affine coupling layers and autoregressive transformations, are also used to model complex distributions. The log-determinants of their Jacobians can be computed in time linear in the dimension, and because they are invertible, points can be mapped back and forth between the base space and the data space.

Here are some examples of invertible linear-time transformations:

- Affine coupling layers.

- Autoregressive transformations, such as Masked Autoregressive Flow (MAF) and Inverse Autoregressive Flow (IAF).

- Planar and radial flows.
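To illustrate the first item, here is a minimal sketch of an affine coupling layer on 2-D points, in the style of Real NVP. The conditioners `scale_net` and `shift_net` are hypothetical stand-ins for the neural networks a real implementation would use. Because the first coordinate passes through unchanged, the Jacobian is triangular, its log-determinant is simply s(x₁), and the inverse has a closed form:

```python
import math

def scale_net(x1):
    """Hypothetical conditioner producing the log-scale s(x1)."""
    return math.tanh(0.5 * x1)

def shift_net(x1):
    """Hypothetical conditioner producing the shift t(x1)."""
    return 0.3 * x1 - 1.0

def coupling_forward(x):
    """Affine coupling: x1 passes through; x2 is scaled by exp(s(x1)) and shifted by t(x1)."""
    x1, x2 = x
    s, t = scale_net(x1), shift_net(x1)
    y = (x1, x2 * math.exp(s) + t)
    return y, s                       # triangular Jacobian, so log|det J| = s(x1)

def coupling_inverse(y):
    """Exact inverse: x1 = y1 and x2 = (y2 - t(y1)) * exp(-s(y1))."""
    y1, y2 = y
    return (y1, (y2 - shift_net(y1)) * math.exp(-scale_net(y1)))
```

The inverse never needs to invert `scale_net` or `shift_net`, which is why the conditioners can be arbitrary networks.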

Amortized

In variational inference, amortization means that instead of optimizing separate variational parameters for every data point, we train an inference network (an encoder) that maps each observation x to the parameters of its approximate posterior q_ϕ(z | x). The parameters ϕ are then the weights of that network.

The cost of fitting the approximate posterior is thereby spread (amortized) across the whole dataset: once the network is trained, obtaining q_ϕ(z | x) for a new observation takes a single forward pass instead of a fresh optimization.

Combined with normalizing flows, the encoder outputs the parameters of the initial Gaussian, and it can also output the parameters of the flow layers, so that the flow refines the approximation separately for each data point.

Implementation

To implement our target densities, we define each one as a simple function that takes a 2-D point and returns a scalar: the (possibly unnormalized) density or log-density at that point. The code for the first example in the figure demonstrates this.
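For illustration, here is a hypothetical target of this kind: an unnormalized log-density on ℝ² shaped like a ring of radius 2 with two lobes on the z₁ axis. The constants are assumptions, not taken from the figure, and the log-sum-exp is computed stably by factoring out the larger exponent:

```python
import math

def log_ring_density(z):
    """Hypothetical unnormalized log-density: a ring of radius 2 with lobes at z1 = +/-2."""
    z1, z2 = z
    r = math.hypot(z1, z2)
    ring = -0.5 * ((r - 2.0) / 0.4) ** 2
    a = -0.5 * ((z1 - 2.0) / 0.6) ** 2
    b = -0.5 * ((z1 + 2.0) / 0.6) ** 2
    m = max(a, b)                                   # stable log(exp(a) + exp(b))
    lobes = m + math.log(math.exp(a - m) + math.exp(b - m))
    return ring + lobes

def ring_density(z):
    """Unnormalized density: exp of the log-density above."""
    return math.exp(log_ring_density(z))
```

Returning the log-density is usually more convenient, since the reverse-KL loss only needs log p̃(z), and the unknown normalizing constant drops out of the gradients.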

Next, we implement a planar flow: a function that transforms a given sample and returns the log-determinant of its Jacobian. Planar flows address the second task above, choosing a sufficiently flexible family of approximate posteriors.

We can compose multiple planar flows into a single class that applies them in sequence and returns the transformed sample together with the sum of their log-determinant Jacobians.
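A minimal sketch of such a composition, assuming h = tanh and plain Python lists for 2-D points. The class names `PlanarFlow` and `NormalizingFlow` are hypothetical, chosen for this example:

```python
import math

class PlanarFlow:
    """Hypothetical planar layer f(z) = z + u * tanh(w^T z + b)."""
    def __init__(self, u, w, b):
        self.u, self.w, self.b = u, w, b

    def __call__(self, z):
        a = sum(wi * zi for wi, zi in zip(self.w, z)) + self.b
        h = math.tanh(a)
        out = [zi + ui * h for zi, ui in zip(z, self.u)]
        psi = [(1.0 - h * h) * wi for wi in self.w]          # h'(a) * w
        log_det = math.log(abs(1.0 + sum(ui * pi for ui, pi in zip(self.u, psi))))
        return out, log_det

class NormalizingFlow:
    """Hypothetical container: applies flows in order and sums their log|det J|."""
    def __init__(self, flows):
        self.flows = list(flows)

    def __call__(self, z):
        total_log_det = 0.0
        for flow in self.flows:
            z, log_det = flow(z)
            total_log_det += log_det
        return z, total_log_det
```

Summing the per-layer terms is exactly the chain rule for determinants: the Jacobian of the composition is the product of the layer Jacobians.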

Recall that the ELBO is the single objective optimized over θ and ϕ. It is obtained by rewriting the marginal likelihood ∫ p_θ(x | z) p(z) dz as an expectation under the approximate posterior and applying Jensen's inequality, which yields a computationally feasible lower bound.

The ELBO splits into two terms: the expected log-likelihood E_q[log p_θ(x | z)] under the approximate posterior, minus the KL divergence between the approximate posterior and the prior. Maximizing it with respect to θ and ϕ fits the model and the approximation together.
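In symbols, the decomposition reads as follows. When the approximate posterior is built from K flow layers, z_K = f_K ∘ ⋯ ∘ f_1(z_0) with z_0 ~ q_0, substituting the change-of-variables density gives the second line, where the log-determinant terms are exactly what each flow layer returns:

```latex
\begin{aligned}
\mathcal{L}(\theta, \phi; x)
  &= \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big]
   - \mathrm{KL}\big(q_\phi(z \mid x) \,\|\, p(z)\big) \\
  &= \mathbb{E}_{q_0(z_0)}\Big[\log p_\theta(x, z_K) - \log q_0(z_0)
   + \sum_{k=1}^{K} \log\Big|\det \frac{\partial f_k}{\partial z_{k-1}}\Big|\Big]
\end{aligned}
```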

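Some flow families, such as Hamiltonian flows, augment the latent variables z with auxiliary momentum variables ω. The kinetic energy of the momentum is ½ ωᵀMω, where M is a positive-definite matrix; if ω ~ N(0, M⁻¹), this term equals −log p(ω) up to a constant, which is how it enters the ELBO of the augmented model.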

Optimization

We fit the flow parameters iteratively with stochastic gradient descent.

At each step, we first draw samples from the base distribution, a Gaussian.

We then apply the sequence of flows to these samples, accumulating the sum of the log-determinant Jacobians along the way.

Next, we evaluate the loss, the reverse KL divergence KL(q ‖ p) from the flow-based approximation q to the target p. It measures how poorly q matches the target; when the target is known only up to a normalizing constant, the loss can still be estimated up to an additive constant, which does not affect the gradients.

Finally, we compute the gradient of the loss, update the parameters, and repeat until convergence.

Here's a step-by-step breakdown of the optimization process:

- Sample from the base distribution (Gaussian)

- Apply the sequence of flows to the sample and get the sum of log determinant Jacobians

- Evaluate the loss (reverse KL)

- Compute the gradient and update the parameters
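The four steps above can be sketched without any dependencies, assuming the simplest possible flow, a single affine layer z = μ + σε (not a planar flow), and a hypothetical 1-D target N(3, 2²) known only up to a constant. Gradients are derived by hand here; in practice an autodiff library would compute them:

```python
import math
import random

def grad_log_target(z):
    """d/dz of a hypothetical unnormalized log-target, N(3, 2^2)."""
    return -(z - 3.0) / 4.0

def train_affine_flow(steps=2000, batch=64, lr=0.05, seed=0):
    """Fit z = mu + exp(log_sigma) * eps by minimizing the reverse KL.

    Per-sample loss (up to constants): log q0(eps) - log_sigma - log p~(z),
    where log_sigma is the flow's log|det J| and log q0(eps) has no parameters.
    """
    rng = random.Random(seed)
    mu, log_sigma = 0.0, 0.0
    for _ in range(steps):
        g_mu, g_ls = 0.0, 0.0
        sigma = math.exp(log_sigma)
        for _ in range(batch):
            eps = rng.gauss(0.0, 1.0)             # 1. sample the base Gaussian
            z = mu + sigma * eps                  # 2. apply the flow
            dlogp = grad_log_target(z)            # 3. pieces of the reverse-KL gradient
            g_mu += -dlogp
            g_ls += -1.0 - dlogp * sigma * eps
        mu -= lr * g_mu / batch                   # 4. gradient step
        log_sigma -= lr * g_ls / batch
    return mu, math.exp(log_sigma)
```

Because an affine flow of a Gaussian can represent N(3, 2²) exactly, `train_affine_flow()` should return values close to μ ≈ 3 and σ ≈ 2; a non-Gaussian target would need a richer stack of flows such as the planar layers above.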

Pyro Implementation

To implement a normalizing flow in Pyro, you can start by defining a simple function that represents your target density. For example, you can use a function that takes a 2-D point and returns a scalar value.

In Pyro, most learnable transforms have a corresponding helper function that constructs a neural network for the transform with the correct output shape. This neural network outputs the parameters of the transform and is known as a hypernetwork.

A planar flow must transform a given sample and return the log-determinant of its Jacobian. To apply several planar flows in a row, we can implement a class that takes a sample, applies the flows in sequence, and returns the summed log-determinant Jacobians.

By using Pyro's built-in helper functions, such as spline_coupling, you can easily create a normalizing flow and train it on your dataset. For instance, you can create a bivariate flow with a single spline coupling layer using the spline_coupling helper function.

Flow-Based Generative Models

Flow-based generative models are a type of deep learning model that can be used for unsupervised learning and generative tasks. They work by transforming a simple distribution into a complex one using a series of invertible transformations.

The implementation of flow-based generative models typically involves three modules: an encoder, a flow-model, and a decoder. The encoder takes the observed input and outputs the mean and log-std of the first variable in the flow of random variables.

The flow model is a stack of flow layers that transforms samples from this initial Gaussian into samples from the more complex distribution. The initial sample is drawn with the reparameterization trick, z₀ = μ + σ ⊙ ε with ε ~ N(0, I), which moves the randomness into ε so that gradients can flow through μ and σ.
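A minimal sketch of the encoder step and the reparameterized draw of z₀, assuming a hypothetical one-dimensional linear encoder (a real model would use a neural network, and the weights below are placeholders). The returned log q₀(z₀ | x) is the starting value from which the flow layers then subtract their log-determinants:

```python
import math
import random

def encoder(x, weight=0.5, log_std=-0.35):
    """Hypothetical linear encoder returning the mean and log-std of z0 given x."""
    return weight * x, log_std

def sample_z0(x, rng):
    """Reparameterized draw z0 = mean + exp(log_std) * eps with eps ~ N(0, 1).

    Returns z0 and log q0(z0 | x), the density the flow starts from.
    """
    mean, log_std = encoder(x)
    eps = rng.gauss(0.0, 1.0)
    z0 = mean + math.exp(log_std) * eps
    log_q0 = -0.5 * math.log(2 * math.pi) - log_std - 0.5 * eps ** 2
    return z0, log_q0
```

Computing log q₀ from ε directly avoids dividing by σ again and is numerically identical to evaluating the Gaussian density at z₀.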

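A radial flow has parameters λ = {z₀ ∈ ℝ^D, α ∈ ℝ⁺, β ∈ ℝ} and contracts or expands points around the reference point z₀: f(z) = z + β h(α, r)(z − z₀), where r = |z − z₀| is the distance from the reference point and h(α, r) = 1/(α + r). The determinant of its Jacobian is:

|det ∂f/∂z| = [1 + β h(α, r)]^(D−1) · [1 + β h(α, r) + β h'(α, r) r]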
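A minimal sketch of a radial layer on plain Python lists, implementing f(z) = z + β h(α, r)(z − z₀) with r = |z − z₀| and h(α, r) = 1/(α + r), so h'(α, r) = −1/(α + r)², and using the determinant [1 + βh]^(D−1) · [1 + βh + βh'r]. As with planar flows, invertibility requires a parameter constraint, here β ≥ −α:

```python
import math

def radial_flow(z, z0, alpha, beta):
    """Radial layer f(z) = z + beta * h(alpha, r) * (z - z0) with h(alpha, r) = 1 / (alpha + r).

    Returns (f(z), log|det df/dz|) using
    det = (1 + beta*h)^(D-1) * (1 + beta*h + beta*h'*r), h' = -1 / (alpha + r)^2.
    """
    diff = [zi - ci for zi, ci in zip(z, z0)]
    r = math.sqrt(sum(d * d for d in diff))
    h = 1.0 / (alpha + r)
    h_prime = -1.0 / (alpha + r) ** 2
    out = [zi + beta * h * d for zi, d in zip(z, diff)]
    dim = len(z)
    log_det = ((dim - 1) * math.log(abs(1.0 + beta * h))
               + math.log(abs(1.0 + beta * h + beta * h_prime * r)))
    return out, log_det
```

For example, with z₀ = (0, 0), α = β = 1 and z = (1, 0), the radial stretch is 1.25 and the tangential stretch is 1.5, so the determinant is 1.875.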

The decoder takes the final latent variable z_K and models p(x | z_K), or an unnormalized version of it such as logits (unnormalized log-probabilities). It plays the same role as the decoder of a variational autoencoder and can be implemented as a fully connected network.

Some types of invertible linear-time transformations used in flow-based generative models include affine coupling layers, autoregressive transformations, planar flows, and radial flows. These transformations enable the modeling of complex data dependencies and multimodal distributions.

Pyro Univariate Transforms

Pyro provides a library of learnable univariate and multivariate transforms, which can be used to build and manipulate transformed distributions.

Pyro's bijective transformations live in the pyro.distributions.transforms module, and the classes to define transformed distributions live in pyro.distributions. For example, the class ExpTransform derives from Transform and defines the forward, inverse, and log-absolute-derivative operations for the exponential transform.

Transform classes define the three operations: forward, inverse, and log-absolute-derivative. These operations are sufficient to perform sampling and scoring. The class TransformedDistribution takes a base distribution of simple noise and a list of transforms, and encapsulates the distribution formed by applying these transformations in sequence.

A transform can be composed with other transforms to produce more expressive distributions. For instance, applying an affine transformation and then an exponential transformation can produce the general log-normal distribution. This is accomplished in Pyro by passing the list of transforms to the TransformedDistribution class.
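To make the forward / inverse / log-absolute-derivative bookkeeping concrete without depending on Pyro, here is a minimal pure-Python analog. The classes `Affine`, `Exp`, and `TransformedNormal` are hypothetical toy versions, not Pyro's API; they only mirror the idea of a standard-normal base pushed through a list of transforms. Composing an affine transform with an exponential yields a log-normal density, which can be checked against the closed form:

```python
import math

class Affine:
    """Toy transform y = loc + scale * x, with log|dy/dx| = log|scale|."""
    def __init__(self, loc, scale):
        self.loc, self.scale = loc, scale

    def forward(self, x):
        return self.loc + self.scale * x

    def inverse(self, y):
        return (y - self.loc) / self.scale

    def log_abs_det_jacobian(self, x, y):
        return math.log(abs(self.scale))

class Exp:
    """Toy transform y = exp(x), with log|dy/dx| = x."""
    def forward(self, x):
        return math.exp(x)

    def inverse(self, y):
        return math.log(y)

    def log_abs_det_jacobian(self, x, y):
        return x

class TransformedNormal:
    """Toy transformed distribution: N(0, 1) base pushed through `transforms` in order."""
    def __init__(self, transforms):
        self.transforms = list(transforms)

    def sample(self, rng):
        x = rng.gauss(0.0, 1.0)          # rng: e.g. a random.Random instance
        for t in self.transforms:
            x = t.forward(x)
        return x

    def log_prob(self, y):
        total_log_det = 0.0
        for t in reversed(self.transforms):   # walk back from the output to the base
            x = t.inverse(y)
            total_log_det += t.log_abs_det_jacobian(x, y)
            y = x
        return -0.5 * (math.log(2 * math.pi) + y * y) - total_log_det
```

Sampling only needs `forward`, while scoring only needs `inverse` and the log-absolute-derivative, which is why these three operations suffice.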

Pyro's ConditionalTransformModule provides a way to create conditional transforms, which are useful when working with conditional distributions. The conditional version of the spline transform, for example, is ConditionalSpline.

Demonstration and Results

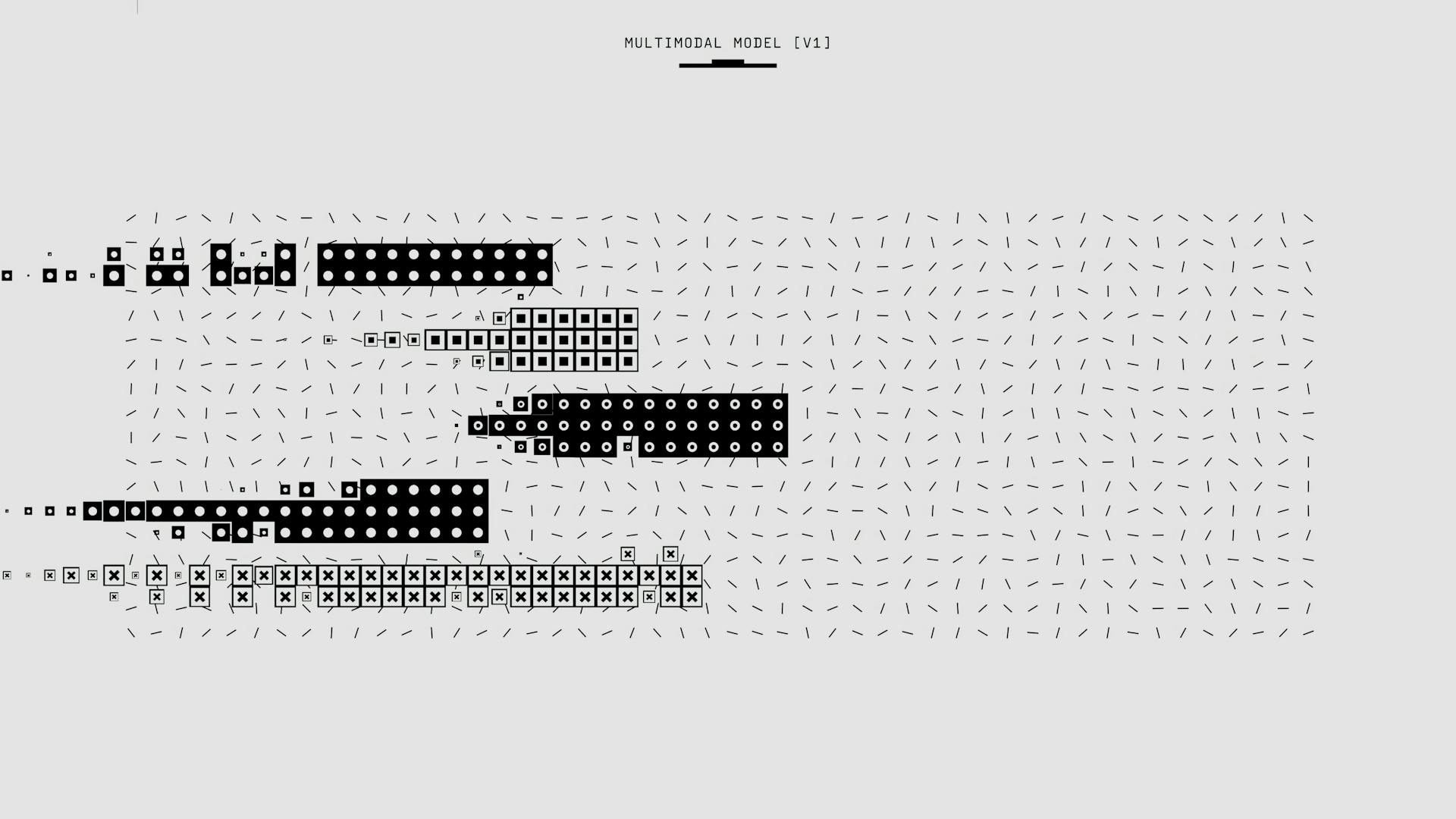

In this demonstration, normalizing flows model the complex distribution of pixel values in an image; once trained, they can generate new images that resemble the training data.

Results

Using 32 planar flows, we obtain good results after some hyperparameter optimization, mainly tuning the number of flows and the learning rate.
